Greetings, everyone! Time to jump into Part II of our assessment of lossy Bluetooth music transmission.

In Part I, we examined the use of an Android 10 device (Huawei P30 Pro) as the audio transmitter, showing the differences between the codecs when played back through the AIYIMA A08 PRO amplifier and its Qualcomm QCC5125 Bluetooth SoC. Please refer to that article for details about the methodology and the comparison with the output from a high-resolution Topping desktop DAC.

For this Part II, let's focus on the Advanced Audio Coding (AAC) codec, which has become a very popular option. Other than the universal default SBC, AAC is probably the most common codec for music transmission because the "elephant in the room", Apple, uses it across its product lines as the standard codec running at 256kbps. Given how widely it is used, AAC is basically the de facto standard for quality music transmission over Bluetooth.

Given the broad range of computers/tablets/phones used among family members here, when I'm looking for wireless headphones, I want to make sure the device supports AAC, probably more so than aptX or LDAC.

Note that there is actually a "family" of AAC profiles, from the early Low Complexity AAC (LC-AAC) originating in 1997 up to later versions like Extended High Efficiency AAC (xHE-AAC) released in 2012. As end users, we're generally not privy to such details, so I'll just use the generic term "AAC" in this article.

Android 10 & 13:

As you may recall from Part I, the Huawei P30 Pro (2019) phone performed poorly using AAC. Here are the graphs again:

Clearly this level of performance is not impressive, even compared to SBC in the tests! And as discussed with Mikhail in the comments, the encoding appears to be software-based rather than offloaded to specialized SoC hardware (the Huawei uses HiSilicon's Kirin 980 SoC).

Using the same test parameters as last time, the question then is: can newer versions of Android perform better? Well, I have here a 2020 Samsung Galaxy Tab S6 Lite running Android 13 on the Exynos 9611 SoC:

Apple's iPhone AAC:

Computers - Windows 11 MiniPC and macOS laptop:

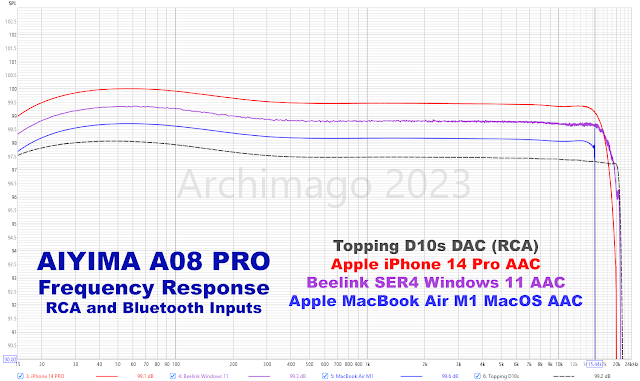

Frequency Response:

Time for frequency response curves comparing the devices, starting with the Android devices and the iPhones. Note that I'll include the desktop Topping D10s DAC for comparison.

Summary:

Bravo Apple for getting AAC encoding quality done right on the iPhones. My assumption is that the iPhone is using hardware-assisted encoding to achieve this.

The quality we see using the iPhones with AAC 256kbps is better than the aptX-HD tested previously and at least equivalent to the higher-bitrate LDAC (>900kbps) on Android. There's certainly something to be said about Apple focusing on a single standard and doing it well!
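To put the "great compression ratio" in numbers, here's a small back-of-the-envelope sketch. It assumes 16-bit/44.1kHz stereo PCM as the uncompressed reference and takes 990kbps as LDAC's top setting; the function names are just illustrative.

```python
# Back-of-the-envelope compression ratios for the bitrates discussed above.
# Assumption: the source is CD-quality PCM (44.1 kHz, 16-bit, stereo), and
# LDAC's highest setting is taken as 990 kbps.


def pcm_bitrate_bps(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bitrate of uncompressed linear PCM, in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def compression_ratio(source_bps: float, codec_bps: float) -> float:
    """How many times smaller the encoded stream is than the PCM source."""
    return source_bps / codec_bps


if __name__ == "__main__":
    cd_bps = pcm_bitrate_bps(44_100, 16, 2)  # 1,411,200 bit/s
    for name, kbps in [("AAC 256kbps", 256), ("LDAC 990kbps", 990)]:
        ratio = compression_ratio(cd_bps, kbps * 1_000)
        print(f"{name}: {ratio:.2f}:1")
```

That works out to about 5.5:1 for AAC at 256kbps versus roughly 1.4:1 for LDAC at 990kbps, which makes the iPhone result all the more notable.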

Unfortunately, AAC encoding is not as good on macOS, and the frequency response isn't as extended, reaching just shy of 15.5kHz; a disappointment for audiophiles, I'm sure. :-|
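How a "cutoff" figure like this might be read off a frequency response measurement can be sketched as below. This is a hypothetical helper for illustration only: it assumes the response is available as (frequency in Hz, level in dB) pairs, uses the level nearest 1kHz as the reference, and treats the first point more than 3dB below that reference as the end of the passband. The test data is synthetic, not a measurement.

```python
# Hypothetical helper: estimate where a frequency response "runs out".
# Assumptions: data are (frequency_hz, level_db) pairs, the reference level is
# taken at the point nearest ref_hz, and the passband ends at the first point
# (scanning upward from ref_hz) that sits more than drop_db below it.
from typing import List, Sequence, Tuple


def estimate_cutoff_hz(
    response: Sequence[Tuple[float, float]],
    ref_hz: float = 1_000.0,
    drop_db: float = 3.0,
) -> float:
    points = sorted(response)
    ref_level = min(points, key=lambda p: abs(p[0] - ref_hz))[1]
    cutoff = ref_hz
    for freq, level in points:
        if freq < ref_hz:
            continue  # only look above the reference frequency
        if level < ref_level - drop_db:
            break  # first point outside the tolerance ends the passband
        cutoff = freq
    return cutoff


def synthetic_brickwall(corner_hz: float) -> List[Tuple[float, float]]:
    """Made-up test data: flat up to corner_hz, then -20 dB per 500 Hz step."""
    data = []
    for f in range(500, 20_001, 500):
        level = 0.0 if f <= corner_hz else -20.0 * (f - corner_hz) / 500
        data.append((float(f), level))
    return data


if __name__ == "__main__":
    print(estimate_cutoff_hz(synthetic_brickwall(15_500)))
```

Because the scan stops at the first out-of-tolerance point, a dip followed by a recovery would be reported as the cutoff; a real analysis would probably smooth the curve first.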

Looking around, I see that Fraunhofer's software is embedded in Android, Windows, and macOS (see here and here), so perhaps that explains the similar qualitative limitations among these devices.

While none of my Android devices performed well on the AAC codec tests, obviously there are many Android models based on all kinds of hardware out there. Maybe Qualcomm Snapdragon SoCs can handle AAC better than what I'm showing here. But then again, Qualcomm would likely be focusing its energies on optimizing aptX performance. Regardless, I hope these published results can raise the bar for Android audio subsystem developers.

The Apple iPhone has shown us that AAC's sound quality can be objectively excellent with a great compression ratio at 256kbps. I'm sure this comes at a higher computational load. Also, AAC tends to impose longer latencies. I've seen sites like this stating that AAC latency is around 60ms (this is just the encoder latency; with Bluetooth transmission and wireless headphone variables, >150ms is common). With music-only playback, latency should not be a problem. Likewise, video players can apply latency compensation to reduce issues like poor lip sync. Real-time interactive gamers, however, might want to look at codecs like aptX-LL that are specifically built to minimize temporal lag. Nice to see the latency of Apple's AirPods already improving over the generations.
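To put those latency numbers in perspective, here's a rough sketch. It relies on one fact about the codec: an AAC-LC frame carries 1024 samples per channel. Everything else is illustrative; the helper names are hypothetical, and the 150ms figure is just the ballpark quoted above. The last function shows the basic idea behind lip-sync compensation: present each video frame later by the audio path latency.

```python
# Rough latency arithmetic for AAC over Bluetooth (illustrative only).
# Fact used: one AAC-LC frame holds 1024 samples per channel.
# Assumption: the total audio path latency is known as a single number.

AAC_LC_SAMPLES_PER_FRAME = 1024


def frame_duration_ms(sample_rate_hz: float,
                      samples_per_frame: int = AAC_LC_SAMPLES_PER_FRAME) -> float:
    """Playback time covered by one encoded frame, in milliseconds."""
    return 1000.0 * samples_per_frame / sample_rate_hz


def latency_in_frames(latency_ms: float, sample_rate_hz: float) -> float:
    """Express a latency as a number of AAC-LC frames' worth of audio."""
    return latency_ms / frame_duration_ms(sample_rate_hz)


def compensated_video_time_ms(video_pts_ms: float, audio_path_latency_ms: float) -> float:
    """Lip-sync compensation: show a video frame later by the audio delay,
    so picture and sound reach the viewer at the same moment."""
    return video_pts_ms + audio_path_latency_ms


if __name__ == "__main__":
    print(f"{frame_duration_ms(44_100):.2f} ms per frame at 44.1kHz")
    print(f"60 ms is about {latency_in_frames(60, 44_100):.1f} frames")
    print(f"frame due at 1000 ms is shown at {compensated_video_time_ms(1000, 150)} ms")
```

In other words, ~60ms of encoder latency is only a few frames of audio, and a player that knows the total path latency can simply hold back the picture by that amount.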

Looking beyond what we have today, maybe Android could do for AAC what it already offers with LDAC: different bitrate settings in the "Developer options". For example, I wonder whether there would be any compatibility issues if a higher quality "AAC 320kbps" option were offered to headphones and soundbars for those who just want to listen to music or watch videos (with latency compensation), knowing that this higher bitrate setting (above 256kbps) will use more processing and lengthen latency.

As I said last week, I think stuff like the Japan Audio Society's certification for "Hi-Res Audio Wireless" is silly and just creates hype. IMO, Apple's iPhone AAC implementation sounds and performs just as well as much higher bitrate LDAC, but it'll never get that "Hi-Res Audio" certification since it's 16-bit and doesn't support higher sampling rates. Let's ignore these rather meaningless numerical specs for lossy encoding. There's no question that AAC can achieve "High Quality Wireless" sound when implemented well.

Wow... Almost September! Life's going to get busy here over the next few weeks.

Hope you're enjoying the music, audiophiles.

You cannot jump over the Apple Eco Fence without repercussions.

Indeed Stephen,

That's what my son is realizing now that he's upgraded from his Android to an iPhone 14 he got from a family member. ;-)

I just don't like that I can't plug in the USB cable and transfer music and images into a normal file system.

Hi Arch,

Thanks for that review; good to know the constraints of Bluetooth codecs. On my Motorola phone under Android 11 (moto g power), the choice seems to be determined by the connected device: all options are there but grayed out in Developer mode when nothing is connected. When I connect my Sennheiser Momentum TW 2, the only choice I get is Qualcomm aptX 16/44.1, so 352 kbps; all others remain grayed out. Good enough for lossy, I guess. If I had Apple earbuds, I would be stuck with a bad AAC implementation…
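For the curious, the aptX figure follows from simple arithmetic if we assume, as classic aptX is commonly described, a fixed 4:1 compression of 16-bit stereo PCM (aptX HD does the same with 24-bit samples). A quick sketch, with an illustrative function name:

```python
# aptX bitrate arithmetic, assuming a fixed 4:1 compression of stereo PCM
# (16-bit samples for classic aptX, 24-bit samples for aptX HD).


def aptx_bitrate_kbps(sample_rate_hz: int, bits_per_sample: int = 16,
                      channels: int = 2, ratio: int = 4) -> float:
    """Encoded bitrate in kbps for a fixed-ratio codec."""
    return sample_rate_hz * bits_per_sample * channels / ratio / 1000


if __name__ == "__main__":
    for rate in (44_100, 48_000):
        print(f"aptX    @ {rate} Hz: {aptx_bitrate_kbps(rate):.1f} kbps")
        print(f"aptX HD @ {rate} Hz: {aptx_bitrate_kbps(rate, 24):.1f} kbps")
```

So aptX at 16/44.1 comes to roughly 352kbps (384kbps at 48kHz), and aptX HD to roughly 529kbps (576kbps at 48kHz).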

Yeah Gilles,

Probably true if you had Apple AirPods and were forced to use AAC.

Definitely room to improve with the BT codecs. How much consumers care, and whether subgroups like audiophiles will push for better quality, remains to be seen. I do hope Android can at least improve and achieve parity with the Apple iPhone, though!

Archimago, thanks again for doing all this work!

Regarding BT latency for gaming scenarios, I know that many people use apps that force their headset / mobile device to use communications-oriented BT profiles like HSP https://en.wikipedia.org/wiki/List_of_Bluetooth_profiles#Headset_Profile_(HSP) over the SCO link, which by design usually have lower latency than A2DP. Everyone hopes that newer low-latency protocols will solve this problem without compromising audio quality.

Cool, thanks for the info, Mikhail,

It's been a long time since I looked into this, so I had a quick peek at the HSP profile and SCO link.

Yikes. The 64kbps CVSD (8kHz sampling rate) and mSBC (16kHz sampling) codecs definitely aren't going to cut it for decent music playback. ;-) But yeah, they should have good latency...
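A quick way to see why: a sampled signal can only carry content up to half its sampling rate (the Nyquist limit), and a 64kbps link leaves only a few bits per (mono) sample. The sketch below ignores framing overhead and filter roll-off, so real-world bandwidth will be somewhat lower; the function names are just for illustration.

```python
# Why HSP/HFP voice codecs can't cut it for music (ignoring framing overhead).
LINK_BPS = 64_000  # both voice codecs discussed above run over a 64 kbps link


def max_audio_bandwidth_hz(sample_rate_hz: float) -> float:
    """Nyquist limit: highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2


def bits_per_input_sample(link_bps: float, sample_rate_hz: float) -> float:
    """Average bit budget available for each (mono) input sample."""
    return link_bps / sample_rate_hz


if __name__ == "__main__":
    for name, fs in [("CVSD", 8_000), ("mSBC", 16_000)]:
        print(f"{name}: up to {max_audio_bandwidth_hz(fs):.0f} Hz, "
              f"{bits_per_input_sample(LINK_BPS, fs):.0f} bits/sample budget")
    print(f"CD (per channel): up to {max_audio_bandwidth_hz(44_100):.0f} Hz, 16 bits/sample")
```

So CVSD tops out around telephone bandwidth (4kHz) and mSBC around 8kHz, versus 22.05kHz for CD audio.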

If you want to hear the quality of the codecs Mikhail is talking about, check out this page:

https://www.redpill-linpro.com/techblog/2021/05/31/better-bluetooth-headset-audio-with-msbc.html

BTW, there is "yet another" independent evaluation of different AAC codec implementations, also naming Apple's implementation as the most "transparent": https://hydrogenaud.io/index.php?topic=120062.0

Nice stuff, and that was only 128kbps AAC!

Archimago, regarding the "lossy hi-res audio" thing from Part I: it is true that this combination of words does not make sense if we interpret "hi-res audio" as "more resolution than the 16/44 CD format, suitable for further processing", which is how mastering engineers and such understand "hi-res". I think if we instead think of "lossy hi-res" codecs as ones that can work with higher dynamic range and then produce a better lossy result, it makes more sense. For example, remember the "Mastered for iTunes" program, which required studios to submit 24-bit material for the lossy AAC distribution that iTunes used back in the day.
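The dynamic-range side of that argument is easy to quantify with the textbook quantization SNR of an ideal N-bit quantizer driven by a full-scale sine, roughly 6.02N + 1.76 dB. A small sketch (the function name is illustrative, and real converters and dither will fall short of these ideal figures):

```python
import math


def ideal_sqnr_db(bits: int) -> float:
    """Theoretical signal-to-quantization-noise ratio of an ideal N-bit PCM
    quantizer for a full-scale sine wave: 10*log10(1.5 * 2**(2*N)),
    i.e. about 6.02*N + 1.76 dB. Ignores dither and real converter noise."""
    return 10 * math.log10(1.5 * 2 ** (2 * bits))


if __name__ == "__main__":
    for bits in (16, 24):
        print(f"{bits}-bit: {ideal_sqnr_db(bits):.1f} dB")
```

That's about 98dB for 16-bit versus about 146dB for 24-bit, the extra precision a 24-bit master gives the encoder to work from, at least in theory.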

For customers who just look at labels, this might make sense, too: if you play lossless hi-res audio from your NAS or a streaming service, it seems logical to listen to it with BT headphones that have a "lossy hi-res" sticker on them.

Yup, definitely important not to have a lossy decode → lossy encode chain for that extra processing!

While we can talk about and think about these meanings, I suspect that the whole point of the JAS logo is still a marketing scheme aimed at the general public, who probably don't grasp the more nuanced background...

Clearly the JAS isn't adding nuance to the discussion by using the same yellow/golden "Hi-Res Audio" logo with the only difference being "Wireless" underneath, seemingly implying that there's some direct correlation between these codecs and truly "Hi-Res Audio" hardware like the DACs that this sticker is also slapped on.

Oh well. It is what it is; as usual, "buyer beware" of the psychology behind the marketing.


Great to see some proper tests done on Bluetooth codecs. I've always been curious about them, but could never get such clarity anywhere else. Thanks!

While you have pretty much cleared up the encoder side of the equation, with iOS+AAC coming out on top, what role does the decoder at the receiving end play in all this? Does AAC support on the receiving end guarantee the highest quality Bluetooth playback if you have an iOS device?

I ask because on 2 of my Bluetooth speakers (Creative Sound Blaster Roar Pro, and Roar 2, both of which support AAC and aptX), Android+aptX sounds a lot better than iOS+AAC. On these 2 speakers, aptX just has more clarity, detail and well defined high-end. Since I can connect 2 Bluetooth devices at once to them, I could test quite easily - I played the same song on both Android and iOS, just paused one device, and played the other immediately after - and yes the difference was quite significant and noticeable. AAC sounds rather muffled compared to aptX. I guess this would imply that my Roar speakers haven't implemented AAC properly? And your Aiyima A08 Pro has? So would you say the decoder side of things can also mess things up?

Aside from the above 2 speakers, I have several other Bluetooth speakers, some with AAC support, some just SBC, and for all those on the whole I'd say Bluetooth audio through iOS sounds better than Android, even when it's just doing SBC.

Try turning on the "Disable A2DP Hardware Offload" option in the developer settings and running the test again. It should improve the quality.

I’ve noticed that the quality of the AAC codec depends on the SoC rather than the Android version or the brand of the device.

I have a Motorola Edge 50 Pro with a Snapdragon 7 Gen 3, and the connection with my car uses AAC at 256kbps, with a cutoff at 16kHz.

My brother has an Edge 40 with a MediaTek SoC, and it seems there is no frequency cutoff at all. My ears can clearly hear up to 18.5kHz, and when generating a tone from the Edge 40, it sounds perfect.

The sound quality is also much superior on the Edge 40 with MediaTek, even with old headphones that work with AAC at 128kbps. On the Snapdragon, compression artifacts are very noticeable, while on the MediaTek, it sounds perfect.
