I don't have an MQA-capable DAC myself (and honestly, owning one is not high on my list of priorities), but a friend happens to have the Mytek Brooklyn, which is fully MQA-native and can decode all the way to 24/384. Furthermore, he has access to a professional ADC of fantastic quality, the RME Fireface, which he used to record the output from the DAC.

[Image: Mytek Brooklyn (image from Mytek). Obviously a very capable DAC!]

1. Can we show that hardware-decoded MQA gets closer to the original signal than the 88/96kHz decoding already done in software?

2. Can we compare the hardware decoder with the output from the software decoder? How much difference is there between the two?

Procedure:

As you can imagine, to objectively assess hardware decoding of MQA beyond the 88/96kHz that the TIDAL software can do, we need to start with a track that is "true" high resolution, with ultrasonic content and a low noise floor. We also want access to the original native-resolution (>96kHz) file, and to have the same track on TIDAL so we can compare against pure software decoding. To "capture" the sound, we can use the same DAC to play the track in its actual original resolution and record it, then record the TIDAL software decode, and finally record the DAC output using the DAC's "full" hardware/firmware MQA decoding.

Because of these requirements, my friend and I decided to use the 2L track "Blågutten" from the Hoff Ensemble's Quiet Winter Night, freely available on the 2L demo page as well as on Blu-ray. As you can see on the demo page, the original DXD (24/352.8) file is available; it represents the "purest", "original" resolution for the DAC and can be used as the reference. Recall that I used a similar technique last year when I looked at the Meridian Explorer 2 DAC.

There are a few caveats about how these recordings were made, which may or may not be significant.

1. The RME Fireface 802 ADC used to capture the DAC output is capable of at most 24/192. Therefore, although we can play back the "original resolution" 24/352.8 DXD, and the Mytek Brooklyn's MQA hardware decoding claims that same resolution, all comparisons are limited to a 192kHz samplerate (i.e. content up to 96kHz).

2. The measurements were taken from the Brooklyn's balanced XLR outputs for the lowest noise and best resolution. However, to avoid overloading the ADC, the Brooklyn's XLR output was jumpered to +4V and the volume set to -5dB. On the Fireface ADC side, the XLR input sensitivity was set to +4dBu. See the settings below:

[Image: Mytek Brooklyn settings. Notice it is running firmware 2.21. (The latest firmware at publication is 2.22, which was only a display bug fix and should not impact the sound.)]

[Image: RME Fireface 802 settings & mixer.]

Alright then, with these formalities out of the way, let's analyze the results...

Results:

Unlike my previous post comparing hi-res downloads to MQA decoding on TIDAL sourced directly from a digital rip, remember that these tests depend on the quality of the DAC and ADC used; this is of course why I spent time above describing the procedure. As such, the signal will to some extent show the limitations of the DAC/ADC process, including noise floor characteristics, which I will try to point out where I can.

My friend was extremely meticulous with these recordings. To answer the basic questions posed above, I'm going to start by comparing 4 primary recordings:

A. Brooklyn DAC playing the original 24/352.8 "DXD" track - "Reference"

B. Brooklyn DAC playing the Tidal software MQA decoded version - "Software MQA"

C. Brooklyn DAC playing as a native hardware/firmware MQA decoder - "Hardware MQA"

D. Brooklyn DAC playing the software decode, which it then decodes further in hardware/firmware - "Soft-Hard MQA"

Notice the "Soft-Hard MQA" recording. According to my friend, the Brooklyn DAC will recognize this partially decoded version from TIDAL at 88kHz (MQA adspeak calls this the "MQA Core" output), then decode it and presumably upsample internally all the way to 24/352.8. The MQA indicator on the Brooklyn turns red (normally it's only blue or green) when it does this.

One by one then, let's try to answer a few questions. I did listen subjectively to each track, so I already had my own impressions, but to help you appreciate what I "heard", let's objectively run Audio DiffMaker for each comparison to show you just what the "residual" difference is over the first 30 seconds of the music. I think this is the only way to understand the magnitude of similarity or difference in the sound, even if subjectively you're ultimately going to have to listen for yourself.
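For readers curious what a null test like DiffMaker's boils down to, here is a minimal Python sketch of the general idea: find the time offset that best aligns the two recordings, match their levels, and subtract. It assumes integer-sample alignment by brute-force cross-correlation and a single least-squares gain; it does no sub-sample alignment or clock-drift correction, and the name `align_and_null` is hypothetical. It is not DiffMaker's actual algorithm.

```python
import math

def align_and_null(reference, test, max_lag=100):
    """Crude null test: align `test` to `reference`, match level, subtract.

    Returns (lag, gain, residual) where residual[i] = reference[i] - gain * test[i + lag].
    """
    n = len(reference)

    def shifted(sig, lag):
        # sig[i + lag] for i in 0..n-1, treating samples outside the signal as silence
        return [sig[i + lag] if 0 <= i + lag < len(sig) else 0.0 for i in range(n)]

    # 1. Alignment: pick the integer lag with the largest cross-correlation
    best_lag, best_corr = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        corr = sum(r * t for r, t in zip(reference, shifted(test, lag)))
        if corr > best_corr:
            best_lag, best_corr = lag, corr

    # 2. Level matching: least-squares gain g minimising sum((r - g*t)^2)
    aligned = shifted(test, best_lag)
    energy = sum(t * t for t in aligned)
    gain = best_corr / energy if energy else 0.0

    # 3. Subtraction: what's left is the "difference" file
    residual = [r - gain * t for r, t in zip(reference, aligned)]
    return best_lag, gain, residual
```

Whatever remains in `residual` plays the role of the "difference" file; its RMS level is the kind of figure the comparisons below report.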

For the record, here are the DiffMaker settings used, essentially the defaults:

1. How much difference was there between the "Reference" playback and "Software MQA" from TIDAL?

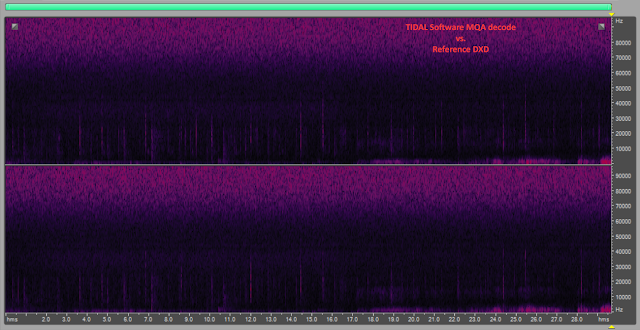

Here's the spectral frequency plot of the "difference":

As you can see in the spectral frequency plot, below around 60kHz there's really very little difference! As I described last time, this tells us that MQA indeed "works" as advertised, creating high-resolution output from the MQA file to a certain extent. Even more interesting, the amplitude of the difference between the actual DXD playback and the software MQA decode averages around -70dB RMS right off the bat! Folks, this level of difference is low, especially considering that most of it is just the ultrasonic noise above 60kHz!
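To put a number like "-70dB RMS" in perspective, here is a small sketch of how an RMS level in dB can be computed from a difference file's samples (floats in the range ±1.0). The 0dB reference is an assumption: here it is a full-scale square wave (RMS of 1.0), so a full-scale sine reads about -3dB; DiffMaker or other tools may use a different convention.

```python
import math

def rms_dbfs(samples):
    """RMS level in dB, with 0dB = a full-scale square wave (RMS of 1.0)."""
    if not samples:
        raise ValueError("empty signal")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return -math.inf  # a perfect null
    return 10 * math.log10(mean_square)  # 10*log10(power) == 20*log10(RMS)
```

For example, a residual at -70dB RMS has an RMS amplitude of roughly 0.0003 of full scale.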

For the moment, I hope we can all agree that noise above 60kHz isn't necessarily desirable or audible, so I'll come back to it later. Let's ignore that ultrasonic noise and downsample the 192kHz file to 96kHz (iZotope RX 5) to see how well frequencies up to 48kHz "null":

For perspective, let me show you what the equivalent plot and amplitude results look like if we run that reference recording through the LAME 3.99 MP3 CODEC at 320kbps and difference it:

Obviously, the MP3 version is less accurate than the MQA decode above. For those who have tried blind-testing it (like we did a few years ago), I believe LAME MP3 encoding at 320kbps would be "transparent" compared to lossless 16/44 for the vast majority of folks. Yet the "Software MQA" null file is a significant 15dB lower in average RMS power than the MP3 one (remember, the dB scale is logarithmic).
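Since the dB scale is logarithmic, it can help to convert such gaps into plain ratios. This tiny sketch does the standard conversions: 20·log10 for amplitude (voltage) ratios and 10·log10 for power ratios.

```python
def db_to_amplitude_ratio(db):
    """Ratio of RMS amplitudes corresponding to a level difference in dB."""
    return 10 ** (db / 20)

def db_to_power_ratio(db):
    """Ratio of powers corresponding to a level difference in dB."""
    return 10 ** (db / 10)

# The 15dB gap between the MP3 and "Software MQA" null files
print(f"{db_to_amplitude_ratio(15):.2f}x amplitude, {db_to_power_ratio(15):.1f}x power")
# prints "5.62x amplitude, 31.6x power"
```

So the MP3 residual carries roughly 31 times the power of the "Software MQA" residual.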

IMO, this is meaningful for recognising just how subtle any effect is! If this is all you remember from this blog post, it might in fact be enough :-).

2. How much difference was there between the "Reference" playback and "Hardware MQA" from the TIDAL stream?

Again, nothing but noise >60kHz, so let's just look at the stuff up to 48kHz:

You can see in the spectral frequency plot that the hardware-decoded output is indeed even "less different" than the software decode above. Likewise, the RMS power calculated for this "null" file is around 6-7dB lower on average! Very impressive. That's a good sign that the hardware does add to the "accuracy" of the decoding, at least up to 48kHz audio frequency.

3. How about the hybrid "Soft-Hard MQA" where the first step in the decoding is done with TIDAL software to 24/88 then the Mytek Brooklyn hardware decodes all the way to DXD?

As I mentioned above, there is an interesting decoding option: software decoding with TIDAL first (MQA Core), after which the Brooklyn takes it from there. As above, let's start with the spectral frequency plot of the "difference" with the full 24/192 recording:

Yet again, we get nothing but noise above 60kHz, so let's just focus on the difference up to 48kHz with a high-quality 24/96 downsample using iZotope RX 5.
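iZotope RX 5's resampler is proprietary, so as a rough stand-in, here is a minimal sketch of the general technique behind a 192kHz to 96kHz conversion: low-pass below the new 48kHz Nyquist frequency with a windowed-sinc FIR, then keep every second sample. The 44kHz cutoff and 101-tap Blackman window are arbitrary illustrative choices; a real resampler would use a much steeper filter (for example via SciPy's `scipy.signal.resample_poly`).

```python
import math

def lowpass_fir(cutoff_hz, fs_hz, taps=101):
    """Windowed-sinc low-pass FIR (Blackman window), normalised to unity gain at DC."""
    if taps % 2 == 0:
        raise ValueError("use an odd tap count so the filter is symmetric (linear phase)")
    fc = cutoff_hz / fs_hz  # cutoff in cycles per sample
    mid = (taps - 1) // 2
    h = []
    for n in range(taps):
        x = n - mid
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        blackman = (0.42 - 0.5 * math.cos(2 * math.pi * n / (taps - 1))
                    + 0.08 * math.cos(4 * math.pi * n / (taps - 1)))
        h.append(ideal * blackman)
    dc_gain = sum(h)
    return [c / dc_gain for c in h]

def decimate_by_2(samples, fs_hz=192_000, cutoff_hz=44_000, taps=101):
    """Low-pass below the new Nyquist (fs/4), then keep every second sample."""
    h = lowpass_fir(cutoff_hz, fs_hz, taps)
    delay = (taps - 1) // 2  # group delay of the symmetric FIR, in input samples
    out = []
    for i in range(0, len(samples), 2):
        acc = 0.0
        for k, c in enumerate(h):
            j = i + delay - k  # delay-compensated convolution index
            if 0 <= j < len(samples):
                acc += c * samples[j]
        out.append(acc)
    return out
```

The odd tap count keeps the filter symmetric (linear phase), so its fixed delay can simply be removed by offsetting the index, keeping the output time-aligned with the input for nulling.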

If you compare those RMS power results, the pure "Hardware MQA" decode with the Brooklyn is still marginally lower (more "accurate"). Considering that we're running the signal through a DAC/ADC step, I'd say this very small difference is likely insignificant, and this "Soft-Hard MQA" combination is essentially identical in quality to the "Hardware MQA" decode based on the objective results.

4. Okay... So if there's a difference between the "Hardware MQA" and "Software MQA" decodes, can we see it in the frequency domain?

Sure... Suppose we look at the same point in the music, around 9 seconds in (there's a waveform peak there that makes it easy for me to set my marker within milliseconds). The FFT of the different recordings looks like this (this is the actual Fireface 24/192 recording, not the DiffMaker "difference" file, of course):

So what do we see?

The yellow plot is the original "Reference" DXD file from 2L, which I presume (but have no way of knowing) is what was fed into the MQA encoder. In green, we have the "Software MQA" TIDAL decode, which we know "unfolds" only up to an 88kHz samplerate; hence from ~44kHz on in the FFT, it follows the noise floor of the ADC. Of interest, up to 43kHz or so, the software decode seems to follow the DXD original most closely, but this is just one time point and doesn't necessarily generalize across the track.

Both the violet and blue traces are the hardware decoding options using the Mytek Brooklyn's native decoder, either direct ("Hardware MQA") or going through the initial TIDAL software decode to 88kHz first ("Soft-Hard MQA"). Indeed, both traces seem to have some "extra" content over the TIDAL software decode, all the way to about 75kHz when I play the recording back and monitor in realtime. Interestingly, however, the ultrasonic signal is not at the same level as the DXD "Reference" downloaded from 2L.

Notice that from about 60kHz up, the "Reference" signal shows quite a bit more noise than the hardware MQA decodes. This is the source of all that high-frequency noise in each of the comparisons (which I filter out when downsampling to 24/96).

There are a couple of possible reasons for all this 60+kHz noise. First, perhaps the 2L DXD download is not the same source used for the MQA-encoded version. Second, perhaps the MQA process filters down the ultrasonic noise during encoding or makes assumptions about what the noise floor should be; sure, there's some content way up above 45kHz, but it doesn't bear a strong resemblance to the original DXD file. Only the folks at 2L can tell us whether the DXD download is indeed what was used to produce the MQA track.
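The tool used for the FFT overlays isn't specified, but as a sketch of what such a plot computes: take a Hann-windowed block starting at a given time (e.g. the ~9 second marker) and report the level of each frequency bin in dB, scaled so an on-bin full-scale sine reads 0dB. This uses a plain O(n²) DFT for clarity (a real analyzer would use an FFT), and `spectrum_db_at` is a hypothetical name.

```python
import cmath
import math

def spectrum_db_at(samples, fs_hz, t_seconds, n=1024):
    """Hann-windowed magnitude spectrum of n samples starting at t_seconds.

    Returns a list of (frequency_hz, level_db) for bins 0..n/2; a full-scale
    sine that falls exactly on a bin reads 0dB.
    """
    start = int(round(t_seconds * fs_hz))
    seg = samples[start:start + n]
    if len(seg) < n:
        raise ValueError("not enough samples after t_seconds")
    # Periodic Hann window; its coherent gain (mean value) is 0.5
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]
    coherent_gain = sum(window) / n
    spectrum = []
    for k in range(n // 2 + 1):
        x_k = sum(seg[i] * window[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        scale = 1.0 if k in (0, n // 2) else 2.0  # fold negative frequencies into one side
        amplitude = scale * abs(x_k) / (n * coherent_gain)
        level_db = 20 * math.log10(amplitude) if amplitude > 0 else -math.inf
        spectrum.append((k * fs_hz / n, level_db))
    return spectrum
```

Overlaying the output of this function for each recording at the same `t_seconds` gives a comparison of the same kind as the plot above, with the usual caveat that one block is just one moment in the track.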

5. What is the difference between "Software MQA" and "Hardware MQA" decoding?

Obviously not much :-). This time, the ultrasonic noise difference, especially from 75kHz up, is the result of the ADC's rising noise floor, as seen in the FFT above. So if I filter that out in software with a 60kHz low-pass filter, this is the difference that's left:

As expected, if you zoom in on the spectral frequency plot, you'll see a transition zone where the software decoder rolls off just above 40kHz. Everything above that is presumably "extra" content the hardware decoder was able to reconstitute, up to the 60kHz low-pass limit of course.

Notice that the average RMS amplitude of the 24/192 "null" file is down at -90dB or so (very very soft).
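The 60kHz cut above was done with a low-pass filter. A different way to get a comparable figure, swapped in here purely for illustration, is to measure only the energy below the cutoff in the frequency domain: by Parseval's theorem, the mean square of a block equals the sum of its squared DFT magnitudes divided by n². The sketch assumes the block holds whole periods of its content (no window), so for real music it is only an approximation; `band_limited_rms_db` is a hypothetical name.

```python
import cmath
import math

def band_limited_rms_db(samples, fs_hz, cutoff_hz):
    """RMS level (dB, 0dB = RMS of 1.0) of the content at or below cutoff_hz.

    Plain O(n^2) DFT plus Parseval's theorem; no windowing, so leakage from
    content that doesn't fit the block exactly is not handled.
    """
    n = len(samples)
    total = 0.0
    for k in range(n):
        freq = min(k, n - k) * fs_hz / n  # bins k and n-k represent the same frequency
        if freq > cutoff_hz:
            continue
        x_k = sum(samples[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))
        total += abs(x_k) ** 2
    mean_square = total / (n * n)  # Parseval: mean(x^2) = sum(|X|^2) / n^2
    return 10 * math.log10(mean_square) if mean_square > 0 else -math.inf
```

Dropping the bins above the cutoff plays the same role as the 60kHz low-pass: ultrasonic ADC noise no longer inflates the residual's RMS figure.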

6. Out of curiosity, what about comparing non-decoded MQA and DSD from the 2L download site for "Blågutten"?!

Using a similar overlay of the FFT from the same spot in the music as above, here it is with all the different encoding formats 2L has graciously provided for this music:

As you can see, the undecoded MQA drops off at 22kHz with a slightly elevated "noise" level above 20kHz (we've seen this before when I looked at MQA last year). DSD64 shows the usual high level of noise-shaped ultrasonic hash above 25kHz, and DSD128 does the same one octave up, from about 50kHz. Obviously, multi-bit PCM is free from these noise floor limitations; of course, we are looking at ultrasonic noise, and I'll leave you to ponder whether this holds any audible significance when handled properly with filters. It looks like we'd need DSD256 to achieve a low noise floor up to 100kHz to compete with 192kHz PCM.
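The "one octave up per doubling" pattern can be put into numbers. This sketch assumes, as the FFT overlay suggests, that the frequency where the shaped noise starts rising scales with the DSD sample rate, starting from roughly 25kHz for DSD64. Real modulators differ, so treat the corner frequencies as rough; both function names are hypothetical.

```python
PCM_BASE_HZ = 44_100  # DSD rates are multiples of 44.1kHz: DSD64 = 64 x 44.1kHz

def dsd_rate_hz(multiple):
    """Sample rate in Hz of DSD<multiple> (e.g. 64, 128, 256)."""
    return multiple * PCM_BASE_HZ

def noise_rise_hz(multiple, dsd64_corner_hz=25_000):
    """Rough frequency where the shaped noise starts climbing.

    Assumption: the corner scales with the sample rate (one octave up per
    doubling), starting from about 25kHz for DSD64 as seen in the FFT overlay.
    """
    return dsd64_corner_hz * multiple / 64

for m in (64, 128, 256):
    print(f"DSD{m}: {dsd_rate_hz(m) / 1e6:.4f} MHz, noise rises from about {noise_rise_hz(m) / 1000:.0f} kHz")
```

Under that assumption, DSD256 (about 11.29MHz) is the first rate whose shaped noise stays down to roughly 100kHz, which is where 192kHz PCM's Nyquist limit sits (96kHz).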

7. Ok... This is all well and good with a hi-res 2L recording, but what about more typical "hi-res" recordings, like popular rock albums that probably originated in analog and were mastered louder with dynamic compression?

Glad you asked. I asked my friend to record Led Zeppelin's "Good Times Bad Times" (from Led Zeppelin, a DR8 track) for comparison. Although he recorded it at 24/192, the MQA version only decodes to 96kHz and everything above that is noise, so I downsampled the recordings. Remember that I examined this track a few weeks back, comparing the MQA decode with the 24/96 high-resolution download, and found them very similar.

Here's the "difference" between "Hardware MQA" and "Software MQA":

Interesting. As you can see in the spectral frequency display above, the difference between hardware and software decoding is more evident with this recording than with the 2L track above (item 5). Isn't it interesting that a loud, pseudo-high-resolution track originally laid down on analogue tape can actually demonstrate more of a difference than a pristine hi-res recording done in DXD? Realize, though, that the average RMS power difference is still quite soft at below -70dB, with much of it in the ultrasonic frequencies.

Here's a hint as to why there are differences:

Above is another look at the "Good Times Bad Times" comparison, but in the frequency domain at the same point in the song. We can see the "difference" between software and hardware decoding more clearly in the 35-45kHz range. It looks to me like the digital filtering parameters differ a little between TIDAL and the Brooklyn firmware, resulting in an earlier roll-off with the Brooklyn.

Also, we see a small amount of noise around 55kHz with the Brooklyn, suggesting that when it is fed a 24/96 signal from TIDAL, the anti-imaging filter used is probably stronger than the upsampling algorithm used for MQA decoding when it is fed the 24/48 MQA data. Mathematical precision is another possibility, particularly the potential for intersample overload in the louder segments. If this is true, the louder the audio track, with more samples approaching 0dBFS, the more noticeable the effect of the filtering differences will be. Finally, a third possibility is that this extra noise is due to "processor noise" from the Mytek's decoding (I doubt this is the case).
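To make "intersample overload" concrete: a tone at a quarter of the sample rate with a 45° phase offset has every sample at exactly ±1.0 (0dBFS), yet the band-limited waveform a DAC reconstructs between those samples peaks about 3dB higher. The sketch estimates that in-between peak with Hann-windowed sinc interpolation. It is a rough illustration in the spirit of oversampled true-peak meters, not what TIDAL or the Brooklyn actually do, and `true_peak_estimate` is a hypothetical name.

```python
import math

def true_peak_estimate(samples, oversample=8, half_width=32):
    """Estimate the waveform peak *between* samples via Hann-windowed sinc interpolation.

    Only positions at least `half_width` samples from either end are evaluated.
    """
    n = len(samples)
    peak = 0.0
    for i in range(half_width, n - half_width):
        for f in range(oversample):
            t = i + f / oversample  # fractional position between sample i and i+1
            acc = 0.0
            for j in range(i - half_width, i + half_width + 1):
                x = t - j
                sinc = 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)
                # Hann taper that reaches zero at |x| = half_width + 1
                taper = 0.5 + 0.5 * math.cos(math.pi * x / (half_width + 1))
                acc += samples[j] * sinc * taper
            peak = max(peak, abs(acc))
    return peak

# Quarter-sample-rate tone at 45 degrees: every sample is exactly +/-1.0 (0dBFS)
tone = [1.0, 1.0, -1.0, -1.0] * 50
peak = true_peak_estimate(tone)
print(f"sample peak 0.00 dBFS, reconstructed peak about {20 * math.log10(peak):+.2f} dBFS")
```

Any upsampling filter that doesn't leave headroom for that extra ~3dB has to clip or otherwise misbehave on such material, which is why loud, heavily compressed masters are where filter implementations are most likely to diverge.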

Conclusions:

Time to wrap this up. This is what I learned in this exercise...

1. As I noted in my previous post comparing high-res downloads of the same mastering with TIDAL's MQA software decode, MQA does "work" as claimed to reconstruct material above 22/24kHz with reasonable accuracy. It uses the bits below the noise floor to reconstruct the high-frequency material above the 22.05/24kHz "baseband" Nyquist frequency. Hardware decoding, as explored with the track "Blågutten", indeed reconstitutes ultrasonic frequencies beyond 44.1kHz, the limit of TIDAL's "MQA Core" software decoder, which goes up to 44.1/48kHz (corresponding to 88.2/96kHz sampling rates).

2. Although I cannot be sure whether the MQA encode of "Blågutten" is based on the same DXD file that is available for download, the reconstituted waveform above 44.1kHz from the Mytek Brooklyn does not seem to correlate strongly with the ultrasonic noise of the DXD. Given how the MQA technique is supposed to "fold" down, the higher octaves seem to have fewer bits to work with; perhaps this simply reflects the lossiness, with each higher octave above the baseband becoming less accurate (more lossy) in the decode.

3. When comparing TIDAL software MQA decoding with hardware decoding from the Mytek Brooklyn, the output is in fact very, very similar. Sure, the Brooklyn hardware/firmware decode does seem more "accurate" than the TIDAL software decode, but I'm just not convinced that I can hear a difference.

4. The Brooklyn is capable of further decoding the 24/88.2 (and presumably also 96kHz) output from TIDAL's software decoder. This tells us there is some kind of fingerprint in the software decoder's output that lets the DAC detect it's coming from an MQA source and proceed, if needed, to "decode" or "render" (as the forthcoming AudioQuest Dragonfly Red/Black supposedly will). Other than the (red) indicator on the display, the final decoded output appears very similar to the Brooklyn's direct 24/44 hardware/firmware decode.
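Conclusion 1 says MQA uses the bits below the noise floor to carry the reconstructed high-frequency information. MQA's actual encoding is proprietary and is not shown here; the toy below only illustrates the arithmetic of hiding data in the least significant bits of a 24-bit word, and how small the resulting change is relative to full scale. All names are hypothetical.

```python
import math

def embed_lsb(samples, payload_bits, k=8):
    """Toy: overwrite the lowest k bits of each 24-bit integer sample with payload bits."""
    mask = (1 << k) - 1
    bits = iter(payload_bits)
    out = []
    for s in samples:
        chunk = 0
        for _ in range(k):
            chunk = (chunk << 1) | next(bits, 0)  # pad with zeros once the payload runs out
        out.append((s & ~mask) | chunk)  # keep the upper bits (the "audio"), replace the rest
    return out

def extract_lsb(samples, k=8):
    """Read the payload back, most significant embedded bit first."""
    bits = []
    for s in samples:
        low = s & ((1 << k) - 1)
        bits.extend((low >> shift) & 1 for shift in range(k - 1, -1, -1))
    return bits

def worst_case_error_dbfs(k, bit_depth=24):
    """Largest possible change, relative to full scale (2**(bit_depth - 1)), in dB."""
    return 20 * math.log10(((1 << k) - 1) / (1 << (bit_depth - 1)))
```

With 8 payload bits per sample, the worst-case change to any sample is about -90dBFS, below the noise floor of most real recordings, which is the general reason such hidden data can go unnoticed on a non-decoding DAC.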

Up to now, I have not discussed my subjective impressions of the various recordings in any detail. Folks, I think the Audio DiffMaker data speak for themselves! With null depths this deep, I was simply unable to ABX the TIDAL software decoding from the hardware decoding on Mytek's Brooklyn with any consistency. The technique is proprietary, so we don't know what special customizations have been implemented for this DAC. Whatever they are, the effect is obviously very small and subtle, to the point where a >US$1500 professional ADC operating at 24/192 is unable to capture much of a difference. In fact, when I play back one of those digital difference files (like the difference between the original DXD and the TIDAL software decode in #1 above) on my ASUS Xonar Essence One through headphones with the headphone amp volume jacked up to 100%, I can barely hear the small signal above the background noise! With any normal music signal, doing this would be intolerably loud through my good ol' pair of Sony MDR-V6 workhorse headphones. I fail to "hear" how such low-level differences (remember, the dB scale is logarithmic) will make any difference, especially when real music is playing and low-level subtleties are further masked.

If anything, it is actually the louder, dynamically compressed Led Zeppelin track that seems to show a larger difference between the TIDAL software decode and the Brooklyn hardware decode. I suspect this speaks to how the filters handle the peaks in a compressed DR8 recording (IMO, the term "Authenticated", in the sense of sounding "the same as in the studio", was always meaningless). Remember that a desktop PC is much more capable than the typical processor in a DAC when it comes to upsampling quality, and I'm sure that if MQA wanted to, the TIDAL software decoder could be very precise and handle intersample overloads better than the DAC firmware algorithm. I would not assume that the software decoder is in any way inferior to a hardware implementation.

By the way, yes, I did ask my wife to sit down with me one evening in the basement soundroom to listen to the various MQA decodes (Raspberry Pi 3 CRAAP™ config --> TEAC UD-501 --> Emotiva XSP-1 --> dual Emotiva XPA-1L monoblocks --> Paradigm Signature S8v3 speakers + SUB1)... Let's just say she lost interest after 5 minutes with a sense of indifference, and we decided to watch another episode of The Young Pope :-).

Based on what I found last time, and now evaluating the output from an actual high-quality MQA-decoding DAC, I can commend MQA for creating an interesting compression CODEC for streaming that unobtrusively embeds data below the noise floor and reconstructs the first unfolding to 88/96kHz ("MQA Core") quite well (I do have some reservations about the octaves above this). However, I see no evidence that whatever temporal "de-blurring" is being performed is audible. This is interesting considering that I'm evaluating one of the 2L tracks here, which should benefit from the full capability of the MQA algorithm, given that it was recorded at very high resolution and information like the microphones used and impulse response measurements should be obtainable. Furthermore, these 2L demos were much ballyhooed a year ago at CES 2016 as benefiting greatly from the MQA process.

Is any of this surprising? I don't think it should be, since modern DACs are already extremely accurate, and honestly, I was not holding my breath despite the usual cheerleading hype articles like this. Remember, as I expressed months ago in my article on room correction, if we truly want time-coherent sound, we must take into account the speakers and room interactions. The only way to do this is through customized measurements in your room. The home theater crowd has known this for years, and DSP-based calibration systems (like Audyssey) have been built into receivers.

Here's one last demonstration/comparison. If I use Acourate to create a room correction filter (as I did here) and run the 24/192 DXD reference file through the convolution DSP using JRiver 22, what do you think the "difference" will look like when I compare the room corrected output with what I fed in?

That's what frequency and time domain correction for the sound room looks like! The effect of smoothing out frequency peaks and valleys becomes obvious in A/B testing. Time domain improvements in soundstaging and the 3D experience of the "space" the recording takes place in are noticeable. This "difference" can be demonstrated objectively, with the amplitude statistic clearly showing that the DSP's sonic impact results in much higher RMS power in the residual file. Whatever MQA supposedly is doing is, of course, extremely minor compared to this level of correction.
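Convolution DSP like the JRiver setup above applies the room-correction filter as an FIR convolution. Acourate's filter design isn't shown here; the sketch assumes you already have the FIR coefficients, convolves, and measures the RMS of output minus input. It ignores the filter's latency, which a real comparison would have to align first (as in a DiffMaker-style null), and both function names are hypothetical.

```python
import math

def convolve(signal, fir):
    """Direct-form FIR convolution (full length: len(signal) + len(fir) - 1)."""
    out = [0.0] * (len(signal) + len(fir) - 1)
    for i, s in enumerate(signal):
        for k, h in enumerate(fir):
            out[i + k] += s * h
    return out

def correction_residual_db(signal, fir):
    """RMS (dB, 0dB = RMS of 1.0) of what the filter changed: filtered output minus input."""
    filtered = convolve(signal, fir)[:len(signal)]
    residual = [y - x for y, x in zip(filtered, signal)]
    mean_square = sum(r * r for r in residual) / len(residual)
    return 10 * math.log10(mean_square) if mean_square > 0 else -math.inf
```

An identity filter (`[1.0]`) leaves a perfect null, while even a plain 6dB cut (`[0.5]`) on a full-scale signal leaves a residual around -6dB, far louder than the -70 to -90dB MQA residuals measured above.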

--------------------------------

Well everyone, I think that's all I have to say about MQA (famous last words?). At the end of the day, there are really no surprises here. In fact, how could there have been (unless one still believes in advertising hyperbole)? From start to finish, this was always a mechanism for compressing "high-resolution" PCM for streaming. High-resolution was never all that audible to begin with, so we can't expect the partially-lossy compression technique to sound much different. In fact, we should be very suspicious if it did sound remarkably different from the high-res source it's derived from! As for the "de-blurring", who knows what they were referring to. Maybe it's just about their minimum-phase upsampling (Yippee... Remember the results of this blind test?), or maybe there really is some background DSP going on during encoding utilizing ADC/DAC impulse responses. In any event, I'm not seeing (or hearing) much impact. Sure, one could criticize that I'm testing based on recordings made by the RME Fireface and that somehow the MQA benefits have been lost going through that ADC. If this is the case, I would submit that the difference would truly be insignificant given the quality of this recording device!

Considering how little difference Mytek's Brooklyn hardware decoding made, I'm certainly in no hurry to upgrade my DACs specifically for MQA. In fact, I'm still of the opinion that software decoding is the way to go, as discussed previously. This is of course in no way a reflection on the impressive recordings my friend made of the Brooklyn DAC; it's clearly a very accurate device, allowing me to compare the files with such deep correlated null depths using the excellent RME Fireface ADC!

Lately, I've heard that the spectre of DRM has resurfaced. A couple of years ago, when I first wrote about MQA, I did wonder about "copy protection", including the possibility that the word "authenticated" is more about security than sound quality. Well, it seems that the good folks poking around in the MQA software decoder have found that it is capable of decoding various forms of "scrambling". Although we have not seen severe sonic degradation thus far (the MQA files I've come across sound pretty good and are close to CD resolution without an MQA decoder), future files may sound worse by design when played back on a standard non-MQA DAC.

Just to be clear, I do not want to sound hysterical or come across as alarmist; if anything, I'm personally rather indifferent about MQA these days and am writing about it since it's the topic du jour. I'm not saying the record labels or MQA want to or would do this, just that they could. Sure, one would still be free to back up the music files perfectly (some folks seem to think that this in itself makes MQA not a DRM scheme), but one would still need MQA-capable hardware or software decoding for high fidelity (especially if the MQA scrambling purposely makes the undecoded sound unacceptably poor); hence the freedom we enjoy now with "flat" high-res files would be constrained.

Bottom line for me: so long as there's standard PCM around for the music I enjoy, I personally have neither a need for MQA nor concerns about it, based on my listening and objective comparisons. Sure, if you think high-res streaming is important, then it has its niche with TIDAL, as intended.

Thanks again to my friend who provided the "virtual" use of his Mytek Brooklyn DAC, RME Fireface ADC, and his time to make these meticulous recordings!

Have a great week ahead everyone... Over the years, I've really enjoyed Arvo Pärt's compositions. This past week, I've been listening to Adam's Lament (2012). Another beautifully deep, moving, and spiritual choral and orchestral experience.

As usual, I hope you're all enjoying the music :-). I would of course love to know your thoughts on the MQA "sound" if you've taken time to do some controlled comparisons...

Addendum: I just noticed a statement on the MQA website I linked to in the text above. They still claim that an MQA file when played back undecoded sounds "superior":

- No decoder? You don’t need a decoder to enjoy our ‘standard’ sound quality – which is now widely agreed by mastering communities to be superior to CD.

"Widely agreed by mastering communities to be superior to CD"? That's actually news to me based on discussions I've had "off the record"...

Also check out the mumbo-jumbo, rather than a proper scientific reference, behind "MQA draws on recent research in auditory neuroscience, digital coding..."

As for Bob Ludwig's testimony:

No. The Nyquist-Shannon theorem was not turned on its head (although Nyquist and Shannon may be rolling in their graves)... I presume Bob Stuart just didn't properly explain to Mr. Ludwig how it worked; this is not exactly "mind blowing" stuff when you take some time to think about it. "Exact analogue", eh? What does "exact" mean again?

Oh yeah, let me remind everyone that minimum phase filtering without "very unnatural" pre-ringing has probably been heard by everyone at some point over the years; this remarkable music playback device is called the iPhone (and the iPad).

Referring to The Absolute Sound magazine!!! Bob L., please say it isn't so! An MQA ad just had to end off with that "revolution" word again of course :-).

This is exactly the sort of test I've been looking for; i.e., a comparison of the analog output of the various encoding / decoding methods. Thank you so much for continuing to share your analyses.

A pleasure jhwalker.

I think it's good to examine what we can and put up some facts rather than just opinions and testimony :-). Especially important when companies these days seem to be rather opaque even when they claim to be open! Furthermore, one wonders - where in the world are the audiophile magazines? Shouldn't *they* be doing a little investigative journalism?

A very well considered and thoughtful analysis, Archimago. Highlighting the minimal differences between the considered MQA cases, as compared to corrections for the "acoustics of the room" was especially telling. Excellent work!

Thanks for the note bb.

I find it rather disingenuous when statements are made about how MQA delivers the sound "end to end". There are 2 implications here. First, if this is true, then it implies that a Dragonfly Black ($100), once its MQA firmware is released, can deliver the "exact" same analogue output as a Mytek Brooklyn ($2000). After all, playback with the appropriate MQA firmware should be "authenticated", right? Sounds exactly like in the studio? Hmmm, really?

Second, "end to end" clearly cannot mean the sound arriving at one's ears. The most important determinants of the sound, the amp/speaker/room imperfections that contribute distortion orders of magnitude larger, have been ignored in this claim.

I really hope this is obvious to everyone out there - including the audiophile press!

The pace of truly interesting developments in the audiophile world is very slow, so we get Roon one year, MQA the next year, etc. Media just doesn't have enough to talk about so things get exaggerated and out of hand when they do latch onto something.

If something like 3D virtual reality audio becomes a hot topic, there will be a stronger focus on the acoustics of the room since this is what the head-related transfer functions (HRTFs) will be based on.

Yes, a good point BB.

With mature technologies like audio, there really isn't that much to talk about month to month. And "advancements" (if we can even call them that), especially in the hardware world, require that extra "spin" to make them sexier and more exciting...

The ultimate spin adjective of course is "revolutionary" :-).

Maybe there will be some revolutionary technology on the horizon. Something like a "neural interface" perhaps for VR. But that's a ways off...

Excellent article but I have one query. On Sound on Sound they say "MQA claim that the total impulse-response duration is reduced to about 50µs (from around 500µs for a standard 24/192 system), and that the leading-edge uncertainty of transients comes down to just 4µs (from roughly 250µs in a 24/192 system)." In that case wouldn't you need an ADC with higher resolution than the RME Fireface 802 in order to see any real differences between the Reference and Hardware MQA decode?

Dammit CBee.

I think you've made me write another blog post about MQA...

sorry, lol!

The entire time I was watching (and listening) during that video, I could smell something really strong around me.

Amazingly, as soon as the video finished, the smell magically went away...

I really have no idea what it was.

Hey Tony,

That's a rather common stench in audiophile advertising unfortunately.

I think also just as unfortunate is that many audiophiles and those who write for the press seem to have become "anosmic" to this rather unpleasant circumstance.

"anosmic" - I had to look that one up haha.

Off topic: you seem to make extensive use of RMAA; however, I have had loads of issues with it (it keeps getting confused when I select ASIO drivers, amongst other things!).

Are you running the PRO version?

I was tempted to purchase a PRO licence; however, development seems to have ceased a couple of years back.

I also note that 'NwAvGuy' (who has also disappeared!) doesn't rate it either.

After much searching, I found this RMAA alternative (which may interest you) http://www.ymec.com/hp/signal2/ra2.htm

It would be great if you do a detailed write up on your test setup - I did search through the blog but couldn't find anything.

Thanks and keep up the good work!

Right Tony,

Indeed, RightMark has its quirks and can be finicky with hardware. Before last year, running tests on my old E-MU 0404USB was sometimes frustrating because the drivers would occasionally crash, sometimes very annoyingly in the middle of a measurement. These days it's much better with the Focusrite Forte hardware and drivers.

I do have the PRO version of RMAA. However, I would not say that it works much better so unless they start the development process again and fix some bugs (like the messed up 192kHz graph drawings), the free edition does the job.

I have reviewed the post from NwAvGuy back in 2011. Yes, RMAA is not an all-encompassing audio measurement suite. It is not meant to be used for amplifier measurements. It's missing some things, and one of course has to be careful to make sure the setup is done correctly; as he said "SETUP IS EVERYTHING". This is why I typically document my measurement chain for transparency.

Over the years, I've gotten to know the software pretty well, and these days I have an old Windows 10 Ultrabook dedicated to running these measurements. Notice that over time I've expanded the measurements: I use a digital oscilloscope to look at absolute voltage levels, and I measure impulse responses and the jitter J-Test separately. Lately, it has been interesting evaluating digital filters with the "Digital Filter Composite" graphs, based on discussions with Juergen Reis and looking at Stereophile measurements... Again, this is outside of what RMAA provides. It's convenient software to get the "basics": frequency response, dynamic range, THD and IMD estimates, and crosstalk.

The software is also great for overlaying comparisons of different hardware; something I rarely see the magazines publish. Gee, I wonder why?

Thanks for the link, I'll check it out. It looks like it could be used mainly for amplifier THD measurements.

BTW: I wonder what happened to NwAvGuy. His writings are a staple for objective audiophiles. We certainly need more of that from the engineer's perspective!

Yes, I too have wondered; his blog was always an interesting read (as is yours).

Regarding RMAA: I have found it is far more stable on Windows 7 (I was using Windows 8.1). In fact, the app appears to behave quite differently under 7 with regard to timings and so on.

(I deleted my previous comment - too many spelling errors!)

Thank you.

Hi Archimago,

Wouldn't it be interesting to measure and show the impulse response behavior of a DAC before and after MQA certification? I also have the impression that a certified DAC has improved impulse response behavior when playing non-MQA files.

I don't know if this is the case. I can imagine that a DAC might only change the filter parameters when playing MQA-encoded files. Otherwise, it would just stick with the standard filters pre-programmed on the device.

After reading many MQA publications and watching recent video interviews, I have a strong impression that the calibration and certification of an MQA DAC is all about its impulse response behavior. This is fed and measured end-to-end, and what is most interesting is that such an MQA time-compensated impulse response is not symmetrical! This is part of their long-term research and patents, I suppose. It is a necessary compensation which enables the time compensation. The problem is that no one is able to measure this without having an MQA encoder. So all thoughts and measurements until now are not much more than speculation...

Hello Pedro.

We'll see about that! Although we don't have access to an encoder, we can still "interrogate" an MQA decoder like the Dragonfly Black and get a sense of the filters being used.

You'll see when I publish the post this weekend with the Dragonfly Black DAC...

Here's another view on MQA - the author of the article seems to know what he's talking about, but I'm no judge of that.

http://www.audiomisc.co.uk/MQA/origami/ThereAndBack.html

Thanks for the link Tony.

Interesting: the article looks at how the origami process might work, based on the patent here:

https://www.google.com/patents/WO2014108677A1?cl=en

Ultimately, we're looking at a process that isn't fully lossless and leaves aliasing effects. And if the analysis is correct, it looks like there are some compromises that need to be taken into account in the encoding process. Of course, nobody can be sure without access to the actual MQA encoder...
