Hey guys and gals. For this blog post, I thought it would be of particular interest to some to have a look at latency and audio interfaces. As you can see in the picture above, I pulled out my Focusrite Forte for this alongside the RME ADI-2 Pro FS.

Latency is simply the delay between an instruction being issued and the resulting event actually happening. Computers, device drivers, and hardware are not instructed in "real time" on a bit-by-bit basis; rather, they act on "chunks" of data, generally with some buffering to keep the pipeline flowing. The buffering mechanism is a major, but not the only, factor in latency.

There is a balance to be struck though. The larger the buffer, the higher the potential latency, but the less likely we are to run into dropouts. The smaller the buffer, the lower the potential latency, but the more demand on the CPU to make sure the buffer doesn't run empty, and the greater the likelihood of audio errors if those needs are not met on time. This idea of "strain" on the CPU is particularly relevant on digital audio workstations (DAWs) when all kinds of DSP like VST plugins are used in audio production.

For us audiophiles, we generally don't care too much about latency because normally we're just interested in the sound quality once we "press play" and so long as the audio starts reasonably quickly and is not disrupted during playback, then there's nothing to complain about. Latency by itself has no effect on sound quality (despite the claims of some, which we'll address later).

Although we may not be disturbed by longer latency (like say >50ms) most of the time in a home audio system, that doesn't mean we might not notice when trying to synchronize multiple events. For example, in a home theater, lip-sync issues would be a side effect of high latency. In the pro audio world however, latency is of importance if one is doing time-sensitive monitoring and recording. You can read more about these in articles like this from Sound-On-Sound back in 2005.

Although there is a range of tolerance depending on what one is doing, from what I've seen, it would be good to have latency of <15ms in DAW applications. For even more critical situations (like percussion work for example), <5ms latency would be even better. Generally, when it comes to consumers and home theater applications like lip-sync, depending on the person, we typically can tolerate even more desynchronization: +45 to -125ms threshold, and +90 to -185ms tolerable range based on the ITU-R BT.1359-1 standard.
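To make those ITU-R BT.1359-1 figures a bit more concrete, here's a minimal Python sketch that sorts an audio/video offset into the bands quoted above. I'm assuming the sign convention where positive means the audio is ahead of the picture and negative means it lags; the function name and the band labels are my own shorthand, not wording from the standard.

```python
# Thresholds in milliseconds, as quoted above from ITU-R BT.1359-1.
# Assumed sign convention: positive = audio leads video, negative = audio lags.
DETECT_LEAD_MS, DETECT_LAG_MS = 45.0, -125.0
ACCEPT_LEAD_MS, ACCEPT_LAG_MS = 90.0, -185.0


def classify_av_offset(offset_ms: float) -> str:
    """Sort an A/V offset into rough perceptual bands (labels are hypothetical)."""
    if DETECT_LAG_MS <= offset_ms <= DETECT_LEAD_MS:
        return "likely unnoticed"
    if ACCEPT_LAG_MS <= offset_ms <= ACCEPT_LEAD_MS:
        return "noticeable but tolerable"
    return "objectionable"


if __name__ == "__main__":
    for offset in (20, -100, 60, -150, 120, -200):
        print(f"{offset:+5d} ms -> {classify_av_offset(offset)}")
```

Notice the asymmetry: we tolerate audio arriving late much better than audio arriving early, which makes sense given that sound normally reaches us after light.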

(Here's an interesting article discussing latency in the days of COVID-19 and remote jamming.)

As with most topics, there are many nuances to go into if we desire, and idiosyncrasies to be mindful of depending on the systems we have. Both hardware and software have roles to play in terms of how much buffering is needed and thus determine the ultimate latency.

For this blog post, let's try out an interesting piece of measurement software - Oblique Audio's RTL Utility - version 1.0.0 released recently on October 2, 2021. It's written with multiplatform JUCE and you can see versions for MacOS X and Windows (32 and 64-bit). See the User Guide for more information.

I was curious to know what latency looked like with the different audio drivers available on Windows. In particular, what happens when we use native ASIO compared to implementations like ASIO4All or FlexASIO, and also Windows' own WDM/Kernel Streaming or DirectSound for playback?

Now before we get to results, let's define the system:

As you can see, I'm using my rather pedestrian "measurements" computer for this, the Intel NUC 6i5SYH, a machine I put together in 2016 with the low-power Intel i5-6260U CPU, Samsung 850 EVO SATA-III SSD, and 16GB DDR4 RAM. I'll be using 64-bit Windows 10 (current version 21H2) connected to the RME ADI-2 Pro FS audio interface above with XLR loopback. Likewise, I have the Focusrite Forte loopback with yellow TRS-to-XLR cables. The analogue cables are either 3' or 6' in length; you'll need very long cables to measure a latency difference given the speed of electrical conduction!
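For a sense of just how long "very long" would need to be, here's a rough back-of-envelope sketch. The velocity factor of 0.66 is my assumption for a typical audio cable (it is not something I measured), and the function names are made up for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
VELOCITY_FACTOR = 0.66      # assumed typical value for audio cable, not measured
FEET_TO_METRES = 0.3048


def cable_delay_s(length_m: float, velocity_factor: float = VELOCITY_FACTOR) -> float:
    """Propagation delay of a signal along a cable of the given length."""
    return length_m / (velocity_factor * SPEED_OF_LIGHT_M_S)


def cable_length_for_one_sample_m(sample_rate_hz: float,
                                  velocity_factor: float = VELOCITY_FACTOR) -> float:
    """Cable length whose delay equals exactly one sample period."""
    return velocity_factor * SPEED_OF_LIGHT_M_S / sample_rate_hz


if __name__ == "__main__":
    six_feet_m = 6 * FEET_TO_METRES
    print(f"6' cable delay: {cable_delay_s(six_feet_m) * 1e9:.2f} ns")
    for rate in (44_100, 768_000):
        metres = cable_length_for_one_sample_m(rate)
        print(f"One sample at {rate} Hz needs about {metres:.0f} m of cable")
```

Under that assumption, a 6' cable adds on the order of nanoseconds, while a single sample period at 44.1kHz would take kilometres of cable.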

Let's start with the RME and see what we find. Here's the result from RTL Utility at 44.1kHz with a small, but reasonable, 64-sample ASIO buffer. ADC and DAC filters are set at linear phase "Sharp":

As you can see, the measured round-trip latency (RTL) is a little less than 5.3ms (232 samples) as highlighted in yellow. The ASIO device driver reported to the software a latency value of 193 samples which is reasonably close to what was measured.
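Since RTL Utility reports latency in both samples and milliseconds, it's handy to be able to convert between the two. A minimal sketch (the helper name is mine):

```python
def samples_to_ms(samples: int, sample_rate_hz: float) -> float:
    """Convert a sample count to milliseconds at the given sample rate."""
    return 1000.0 * samples / sample_rate_hz


if __name__ == "__main__":
    rate = 44_100
    print(f"Measured RTL : {samples_to_ms(232, rate):.2f} ms")  # 232 samples
    print(f"Reported RTL : {samples_to_ms(193, rate):.2f} ms")  # 193 samples
    print(f"One buffer   : {samples_to_ms(64, rate):.2f} ms")   # 64-sample buffer
```

The measured 232 samples at 44.1kHz works out to about 5.26ms, which is the "a little less than 5.3ms" figure above.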

We can change the buffer settings in the RME control panel if we want, but suppose I leave it alone and just stay with the "base 64-sample buffer" setting. As we stepwise increase samplerate from 44.1 to 768kHz (the "Next sample rate" button), the RME driver will increase buffer size by 2x with each major doubling of samplerate (i.e. a 64-sample buffer at 44.1/48kHz, doubling to 128 samples for 88.2/96kHz). Note that not all drivers will behave like this.
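Here's a small sketch of that buffer "family" behaviour. It simply extrapolates the doubling rule described above all the way to 768kHz; I'm assuming the pattern holds at every step, and the function is my own illustration rather than anything from the RME driver.

```python
BASE_RATES_HZ = (44_100, 48_000)


def buffer_for_rate(sample_rate_hz: int, base_buffer: int = 64) -> int:
    """Buffer size that scales with the multiple of the 44.1k/48k base rate.

    Assumes standard rates only (1x, 2x, 4x, 8x, 16x of a base rate).
    """
    for base in BASE_RATES_HZ:
        if sample_rate_hz % base == 0:
            return base_buffer * (sample_rate_hz // base)
    raise ValueError(f"Unsupported sample rate: {sample_rate_hz}")


if __name__ == "__main__":
    for rate in (44_100, 48_000, 88_200, 96_000, 176_400, 192_000,
                 352_800, 384_000, 705_600, 768_000):
        buf = buffer_for_rate(rate)
        print(f"{rate:>7} Hz: {buf:>4} samples = {1000 * buf / rate:.3f} ms per buffer")
```

The point of the doubling is that the buffer's duration in milliseconds stays constant within each family even as the sample count grows.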

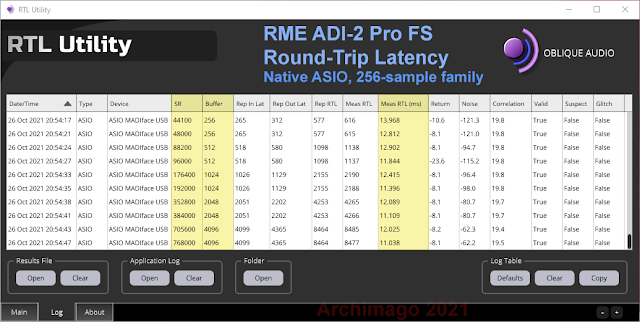

We can use the "Log" display at the bottom panel for a summary table with details including reported in/out latencies (note that there are both playback as well as incoming buffers):

I highlighted the samplerate, buffer sample size, and measured RTL in milliseconds for convenience.

Notice that we can compare the "Rep(orted) RTL" with the "Meas(ured) RTL" to check whether there's good concordance; if the driver/device is "honest" about the performance. If there is a large discrepancy, the software will flag the result as "Suspect". For the RME, it looks like all measurements were valid, nothing suspicious, and the software did not detect any "glitch" in what was sent out from the DAC and received back through the ADC.
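The kind of concordance check being described could look something like the sketch below. To be clear, I don't know the criterion RTL Utility actually uses to flag a result as "Suspect"; the 25% tolerance here is a made-up placeholder purely for illustration.

```python
def check_concordance(reported_samples: int, measured_samples: int,
                      tolerance: float = 0.25) -> tuple[float, str]:
    """Compare reported vs. measured RTL.

    `tolerance` is a hypothetical threshold, NOT RTL Utility's real rule.
    Returns the relative gap and a simple label.
    """
    gap = abs(measured_samples - reported_samples) / measured_samples
    return gap, ("Suspect" if gap > tolerance else "OK")


if __name__ == "__main__":
    # RME at 44.1kHz / 64-sample buffer: reported 193, measured 232 samples.
    gap, label = check_concordance(193, 232)
    print(f"Relative gap: {gap:.1%} -> {label}")
```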

The "Return" value is in dB and reflects the return signal amplitude. Andrew Jerrim, the software author, tells me that RTL Utility incorporates 12 peaks within its test signal; these are averaged, and that average is the level reported in this column. As for "Correlation", this is a value of the "cross-correlation" between the intended and return signals. A factor of 40 is high, but it can be lower and the program will still pick up and calculate the time delta (latency).
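For those unfamiliar with cross-correlation as a way to find a time delay, here's a toy, pure-Python sketch. It uses a random noise burst as the probe and a simulated loopback; this is only meant to show the principle and is not RTL Utility's actual test signal or algorithm.

```python
import random


def estimate_delay(sent, received, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(s * received[i + lag]
                    for i, s in enumerate(sent)
                    if i + lag < len(received))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def simulate_loopback(sent, delay, gain=0.5, noise=0.01, seed=1):
    """Hypothetical loopback: delayed, attenuated copy plus a little noise."""
    rng = random.Random(seed)
    out = [rng.gauss(0, noise) for _ in range(len(sent) + delay)]
    for i, s in enumerate(sent):
        out[i + delay] += gain * s
    return out


rng = random.Random(0)
probe = [rng.uniform(-1, 1) for _ in range(512)]
received = simulate_loopback(probe, delay=232)

if __name__ == "__main__":
    print("Estimated delay:", estimate_delay(probe, received, max_lag=400), "samples")
```

The correlation peaks sharply at the true delay because the probe only lines up with its own delayed copy at that one lag; that is why the method still works when the return signal is attenuated or a bit noisy.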

Now, suppose we're doing stuff like extensive DSP processing on the computer and we need to have more buffer size. So instead of the 64-sample buffer family, let's run the test with the 256-sample "family" of buffers starting at 44.1kHz (this is adjusted in the RME ASIO control panel, not shown):

That jump from 64 to 256-sample buffer size is a 4x increase. Notice however that the measured RTL increased 2.7x at 44.1kHz, 3.1x at 96kHz, and 3.6x at 768kHz. I believe these results will vary depending on the audio interface you're using, maybe even the computer hardware.
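One way to make sense of why a 4x buffer doesn't mean 4x latency: suppose the round trip consists of one input buffer, one output buffer, and a fixed overhead (converter filters, USB transfer, etc.) that doesn't change with buffer size. This is my simplified model, not a description of what the RME driver actually does internally.

```python
def fixed_overhead_samples(measured_rtl_samples: int, buffer_samples: int) -> int:
    """Overhead left after subtracting one input and one output buffer (assumed model)."""
    return measured_rtl_samples - 2 * buffer_samples


def predicted_rtl_samples(buffer_samples: int, overhead_samples: int) -> int:
    """Predicted RTL under the same assumed model."""
    return 2 * buffer_samples + overhead_samples


# Measured earlier: 232 samples RTL at 44.1kHz with a 64-sample buffer.
overhead = fixed_overhead_samples(232, 64)
predicted = predicted_rtl_samples(256, overhead)
ratio = predicted / 232

if __name__ == "__main__":
    print(f"Implied fixed overhead: {overhead} samples")
    print(f"Predicted RTL at 256-sample buffer: {predicted} samples ({ratio:.2f}x)")
```

Under this toy model the predicted increase at 44.1kHz lands close to the 2.7x measured, which suggests the fixed portion of the latency is what keeps the ratio below 4x.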

We can compare the results then with the Focusrite Forte USB audio interface. Here's a screenshot of the Forte's control panel - an example of where you adjust the buffer settings with each ASIO device. On the right is the RTL measurement at 44.1kHz / 64-sample buffer that correlates with the same setting as the RME ADI-2 Pro FS above:

Notice that with the Focusrite Forte, RTL is significantly higher than with the RME. This is an example of the measured (and reported) variations we see between different hardware and drivers. We can then have a peek at the RTL using various samplerates supported by the Focusrite Forte from 44.1 to 192kHz; let's set the buffer sizes to the same as the RME for the table comparison:

This latency difference between audio interfaces could be one of many variables that can differentiate the quality/performance of the device and might be one of the factors that correlate with price (the RME is more expensive than the Focusrite).

For low-latency work, I don't think it's any surprise that one should stick with the native ASIO driver provided by the manufacturer. After all, ASIO (Audio Stream Input/Output) was specifically designed for this purpose of allowing professional-level, optimized low-latency, direct-to-hardware work to be done on Windows audio workstations. This then bypasses the usual OS audio path which has the job of mixing together all the various and sundry audio streams in general computing.

There are times though when we might be "forced" to mix-and-match devices and software. For these situations, we often need to use "wrappers" like ASIO4All or as discussed a couple weeks back, FlexASIO. Going back to the RME ADI-2 Pro FS, what happens if we measure latency with ASIO4All instead of the native RME ASIO driver?

That's what happens ;-). Notice that instead of the native RME driver latency of 5.3ms, it's increased to 15.8ms with ASIO4All, about 3x the value. ASIO4All converts the ASIO data into WDM/Kernel Streaming output in Windows.

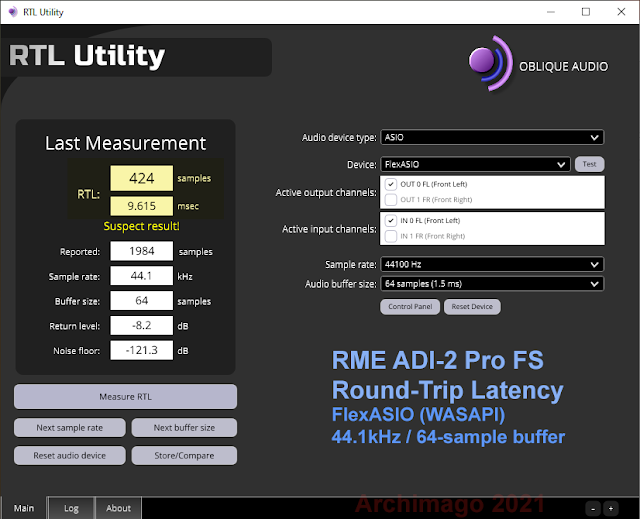

Similarly, we can have a look at FlexASIO (WASAPI out):

In this case, we see that the latency is 9.6ms, or a little under 2x that of the native RME ASIO driver. Note that getting FlexASIO working was a bit of a pain... Sometimes it works, sometimes it crashes, and sometimes I need to use REW to change settings before it will work. There's some refinement to be done with FlexASIO including automatic samplerate change; currently I have to go into the Windows Settings to adjust this.

We can also ask RTL Utility to not use ASIO and instead try the "Windows Audio" or "DirectSound" interfaces like this:

Notice that I cannot use a small 64-sample buffer with either "Windows Audio" or "DirectSound" directly. Below the buffer values of 132 or 1023 samples as above, the software will either not work or "glitch". While Windows Audio (I assume this is WDM) isn't too bad at <15ms (very similar to ASIO4All), DirectSound's result of 122ms is obviously high!
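To put the driver comparison side by side, here's a small sketch that takes the RTL figures quoted in this post and expresses each as a multiple of the native ASIO result, along with whether it meets the rough <15ms DAW guideline mentioned earlier. "Windows Audio" is left out since I only gave it as "<15ms" rather than an exact number, and keep in mind the buffer sizes were not identical across these paths.

```python
# RTL values in milliseconds as quoted in the text (RME ADI-2 Pro FS, 44.1kHz).
MEASURED_RTL_MS = {
    "RME native ASIO": 5.3,
    "FlexASIO (WASAPI)": 9.6,
    "ASIO4All": 15.8,
    "DirectSound": 122.0,
}


def summarize(results, baseline="RME native ASIO", daw_limit_ms=15.0):
    """Map each driver to (multiple of baseline, meets DAW guideline?)."""
    base = results[baseline]
    return {name: (round(ms / base, 1), ms < daw_limit_ms)
            for name, ms in results.items()}


summary = summarize(MEASURED_RTL_MS)

if __name__ == "__main__":
    for name, (multiple, ok) in summary.items():
        verdict = "under 15ms" if ok else "over 15ms"
        print(f"{name:<18} {multiple:>5.1f}x native  ({verdict})")
```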

I was curious... Instead of a loopback to the same device, what's the latency if I connect:

computer --> RME ADI-2 Pro FS output --> 6' XLR cable --> E1DA Cosmos ADC --> computer

As you can imagine, this chaining of various devices with different drivers, using ASIO4All to patch them together must exacerbate latency!

Indeed, the best I could get was about 50ms latency for this kind of "chaining" of devices, using a 176-sample buffer. Setting the buffer any smaller would result in RTL Utility / ASIO4All crashing.

Thankfully, when we're doing hardware measurements as I have done on this blog, latency isn't a problem so I would happily set the buffer to 512-samples or more sending the DAC output to the Cosmos ADC.

One last thing about this software, there's enough resolution here to even differentiate small latency differences between the digital oversampling filter settings! For example, the RME ADI-2 Pro FS can switch between different ADC and DAC filters and we can see the relative changes:

Notice that the reported in/out numbers are the same. But we can see the subtle variation in the actual measured values in fractions of a millisecond.

As you can see, the "SD" (Short Delay), minimum phase filters (see ADI-2 Pro FS filter discussion here) provide the lowest latency results. For the DAC, the "NOS" mode provides the lowest latency of all since there's no FIR filtering performed (as usual, don't get too excited about the NOS sound, the lower latency is not a marker of sound improvement).

In Summary...

I think RTL Utility is a pretty cool tool to try out and appears to be very accurate in how well it can measure differences - even down to the level of small changes due to switching the oversampling filter!

For folks who do (home) studio work, this is a great way to optimize settings and confirm latency results from audio cards and external interfaces like the USB boxes. Using the tool, one can confirm that the reported latency is consistent with actual performance (with the device's native ASIO driver preferred of course).

While I did not try it, the User Guide does mention the ability to measure an "acoustically coupled" set-up from speaker to microphone. Obviously, the distance between speaker and microphone will add to the time difference as well (about 2.9ms per meter, given a speed of sound of roughly 343m/s).
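A quick way to budget for that extra acoustic delay is to divide the distance by the speed of sound. The sketch below assumes 343 m/s (dry air at roughly room temperature); the helper name is mine.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 C


def acoustic_delay_ms(distance_m: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Time for sound to travel the given distance, in milliseconds."""
    return 1000.0 * distance_m / speed_m_s


if __name__ == "__main__":
    for metres in (0.1, 1.0, 3.0):
        ms = acoustic_delay_ms(metres)
        samples = ms * 44.1  # equivalent samples at 44.1kHz
        print(f"{metres:>4.1f} m -> {ms:5.2f} ms (about {samples:.0f} samples at 44.1kHz)")
```

So even a microphone placed 1m from the speaker adds more than half the RTL measured for the RME over its native ASIO driver.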

If you find latency testing to be of interest, check out the DAWbench website for more on evaluation of audio workstation hardware/software.

Now for the audiophiles, as I mentioned right at the start, we don't need to worry about latency with high quality music playback. We can anticipate that latency increases if we apply filtering. For example, the 1M-tap Chord Hugo M-Scaler has a "video mode" which reduces filter delay if needed. Likewise, if we apply FIR DSP room correction, expect further delay when we press the "play" button for the convolution process. Some programs, like JRiver are capable of compensating for DSP latency, so look for that if you're doing video/audio playback.
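For a rough idea of how much delay a long filter can add: a symmetric (linear-phase) FIR filter with N taps has a group delay of (N - 1) / 2 samples. The tap count below is just a hypothetical room-correction filter length I picked for illustration; it isn't taken from any specific product, and minimum-phase designs will behave differently.

```python
def linear_phase_fir_delay_ms(num_taps: int, sample_rate_hz: float) -> float:
    """Group delay of a symmetric (linear-phase) FIR filter: (N - 1) / 2 samples."""
    return 1000.0 * (num_taps - 1) / 2 / sample_rate_hz


if __name__ == "__main__":
    taps = 65_536  # hypothetical convolution filter length
    for rate in (44_100, 96_000):
        delay = linear_phase_fir_delay_ms(taps, rate)
        print(f"{taps} taps at {rate} Hz -> {delay:.0f} ms")
```

Delays of this size don't matter for music playback, but they are well beyond lip-sync tolerance, which is why compensation features matter for video.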

[Time permitting, I'm curious how RTL Utility might measure the effect of DSP delay; for example, using Mitch Barnett's HLConvolver in a loop-back with "virtual cables". Stuff to try out another time maybe.]

--------------------

Over the years I've heard "computer audiophiles" hold beliefs that lower latency, low buffer playback settings result in "better" sound. You see beliefs such as this for example on the JPLAY forum, or here a number of years back.

Smaller buffers mean that the CPU must intervene more frequently to feed the DAC which increases processor load. This is counterintuitive to other recommendations made in audio forums! Typically, the idea is to reduce CPU/component activity to improve sound quality, ostensibly to reduce potential electrical noise. Suggestions such as pre-loading an audio file into RAM first to reduce hard drive activity, slimming down the OS, and shutting down unused processes in the interest of reducing CPU load at least sound reasonable!

But how is reducing the buffer and asking the CPU to intervene more frequently helpful? As usual, these kinds of discussions never seem to include any measurements to demonstrate that what was recommended actually improves the sound - much less even makes a difference!

It's due to these kinds of apparent neurotic beliefs in the absence of evidence that allows audiophilia to be infected by a "den of robbers" with their questionable offerings whether it's software potions (JPLAY?) or hardware trinkets (JCAT?). I trust most of us are weary of this stuff by now, and simply avoid...

--------------------

Speaking of potions and trinkets, let's talk about PLACEBO. The reason I'm bringing this up has to do with the John Darko + Michael Lavorgna podcast put up the other day; a reader mentioning this to me in one of our discussions. The segment in question started around 26 minutes. Good on ya "Pete" for bringing up the issue of the "placebo effect" and subjective reviews from your buddy Mr. Darko.

As you know, the placebo effect is a psychological response where an intervention is reported as being beneficial when in fact the treatment, pill, product, or device has no actual intended physiological mechanism to result in such supposed benefits. This of course does not mean that with deep enough testing, one cannot see physiological brain changes. For example, a classic would be neuroimaging studies of placebo causing dopamine release in the striatum of the Parkinson's Disease patient. Also, just because a person might testify that they "feel good" due to some treatment doesn't mean ultimately there are any meaningful benefits other than that emotional change (for example, unorthodox cancer treatments don't improve survival - consider the cases of advocates, conspiracy theorists, and something like Laetrile/amygdalin).

Darko's main argument is that if he "hears" an improvement (with controversial "Product X"), and another magazine reviewer "hears" something, and the manufacturer also supposedly "hears" a positive difference, and so on and so forth, then maybe it's not placebo because multiple people all claim the same thing. That doesn't really carry much weight because there are multiple examples where many people have false beliefs and claims. Various religions, cults, psychics, and swindlers have over the course of human history led people to believe things that are not true! Some (like almost 1000 believers in Jonestown) even were willing to sacrifice their lives for such madness as an extreme example - be careful of "drinking the Kool-Aid" (a reference to this event) guys and gals.

Of course nobody wants to believe that they can be affected significantly by subconscious processes, right? Least of all anyone who sees himself as a "professional" reviewer who believes he has adequate hearing acuity, experience, knowledge, or wisdom to declare whether a product is good or not to audiophiles at large.

Yes, of course the Stereophile writer could be wrong (31:10) regardless of how "brilliant" (creative? imaginative?) of a writer he is. Darko could be wrong. And Lavorgna could be wrong. Is their testimony in any way special admissible evidence beyond simply "he said..."?

Notice how Darko doesn't just come out and tell us what "Product X" is. What are we talking about? An expensive USB cable perhaps? Non-useful stuff like the AudioQuest JitterBug? Why don't you just come out and confront the topic head-on so audiophiles can consider for themselves what this nebulous device is you're defending? I'm going to guess that he's being protective of the company. And that, I believe is also the unspoken (not likely unconscious) positive bias towards the Industry which is beyond the subconscious placebo effect. As far as I can tell, Darko is a salesman, not a truly independent reviewer. Therefore, one should certainly be selective with his comments (48:15) as to which ones make sense and which are harder to believe.

You see, placebo is not something we "suffer from" (35:50). It's just an example of the kinds of biases we all experience just by being human. We are not immune from biases by nature of our being "subjective" creatures with emotions, who value social contact, judge ourselves in the company of others, and are ultimately each limited in our cognitive and perceptual abilities. Relationships with people we respect will color our impressions. To be granted a "loan" of some expensive gear so we can make a video review and monetize content imparts a favour. To achieve the status of an "influencer" by being associated with other people or companies we esteem will make us feel good. With all these potential effects, is it any surprise that within a certain "type" of reviewer group (those who are purely subjective), there tends to be much more credence given to products which are controversial?

Conscious or not, these effects are powerful and must not be discounted. To suggest that biases (including placebo effect) are things that happen to "other people" and not to ourselves is simply lacking in insight and also frankly dishonest.

As such, Darko can't claim that audiophiles only believe the placebo effect applies just to controversial products like "Product X"! Yes John, it does "infect" all your reviews (48:30) - amps, DACs, speakers, etc... In different ways biases are just part of the subjective reviewing process. The job is to minimize these biases so as not to misrepresent products and their true value. Rational audiophiles don't typically say that speakers are "placebo" because we appreciate that speakers actually do sound different to an obvious extent. This is easily demonstrated objectively as well! But that doesn't mean there are no biases at work - designs from Zu or DeVORE often seem to be hyped up within the media even when objective tests point to significant anomalies.

A major difference between speakers, amps, or even DACs and controversial items like "Product X" is that the latter are typically egregious insults to science as it is applied in consumer electronics; after all, these products are the fruit of scientific understanding and the engineered application of those principles.

This is why I think product reviews, where possible, should contain both objective and subjective assessments. Objectivity anchors the reviewer to a frame of reference, allows comparisons to be made, encourages him/her to demonstrate claimed benefits of a product and challenges the reviewer to think about the veracity of a company's declarations. Such a reviewer would still be free to claim he/she "hears" a difference but at least psychologically and intellectually will also be tasked to offer explanations for the dissociation between test results and claimed experience when this happens.

There is another health-related psychological effect we might want to borrow from the medical lexicon when thinking about audiophilia. "NOCEBO" - as per the Oxford Dictionary:

A detrimental effect on health produced by psychological or psychosomatic factors such as negative expectations of treatment or prognosis.

We can see an analogue of this within the audiophile world when we look at the brands of products represented in the pages of Stereophile, TAS, Hi-Fi+, etc. Why is it for example that they never review something like the Topping D90SE (which we looked at recently)? I'm sure many audiophiles would be interested in knowing more given the affordability and reputed performance of a device like this. Is this a subconscious or conscious omission? Are brands like Topping or SMSL not expensive enough, or lacking adequate advertising in the pages of Stereophile, so as not even to be worth a mention? How likely do you think it is that a reviewer in these magazines might use subjective words like "harsh", "unrefined", "bright", "flat", "lacking in dynamics", "dull", "ear-piercing", "clinical", "bass shy", "too hi-fi", each holding negative connotations, as an expression of inherent negative expectations even when measurements offer no verifiable rationale?

In the unlikely situation that Stereophile were to hold a shoot-out between DACs, do you think a US$900 Topping DAC would ever be declared the "winner" compared to the best of dCS, Chord, EMM, HoloAudio, PS Audio, T+A, totaldac, AudioNote, etc. (whatever their "Class A/A+" might be at the time)? If we were to hold blinded listening sessions with trained listeners and an impartial investigator, how confident are we that the expensive products would perform well based on perceived fidelity or at the very least hold some correlation with the price structure?

So, just as there are all kinds of positive "placebo" biases, audiophiles should be mindful to watch for negative "nocebo" as well. "Cheap Chi-Fi" could be a phrase some might use as an example of preconceived notions about a class of audio products which have nothing to do with actual sonic performance.

Anyways, good job "Placebo Pete" for raising obvious issues that some "professional" subjective-only audiophiles seem to have a problem acknowledging, and who apparently want to suppress concerns that are quite obvious to the educated public.

A couple of final thoughts on this:

1. We do not need direct experience (38:40) with something to believe, or even know it's BS. Do we need to try out psychic healing to know that it is ineffective for appendicitis? Must I send money to Ted Denney first before I should doubt his nonsense (see here, here for actual experience as well)? Of course not. The wise person would recognize that the burden of proof for fantastic claims must be the responsibility of the company/person making such statements. This doesn't mean we can't test products ourselves, but if it's going to cost $$$ or waste time, then by default, skepticism is warranted. To not use our critical thinking abilities in the 21st Century nor demand evidence before handing over a credit card number is to entertain scams and reward snake oil when resources could be rewarding reputable companies.

2. Of course there are placebo effects in the auditory domain (47:05)! Lavorgna, as usual, is a poor conversationalist who adds little actual knowledge and is out of his depth. He's wrong - here's a study on the placebo effect and hearing aids (2013). People do report "hearing" differences. By just describing a hearing aid as being of a "new" design and a yellow color, this changed speech-to-noise ratings and subjective sentiment shifted to this device which was in fact the same as the "conventional", beige one. The conclusion is that blinded methodology is needed when testing hearing aids, just as is necessary in hi-fi audio as well. That the audiophile magazines and Industry fight against blind test methodology is plainly disingenuous. There should be no controversy about the importance of blind testing to determine significant audible differences at this point in history (even J. Gordon Holt knew the importance of "honesty controls").

--------------------

To end, for the digital photographers, I got one of these a few weeks back:

That's the Epson EcoTank ET-8550 13" (A3+ paper) printer intended for photos although it also can do scanning (no FAXing, not an all-in-one). This thing is seriously good - an "early Christmas present" for myself considering how low the stock is for a lot of the tech toys this year around here in Vancouver!

Anyhow, I'll talk more about this next week I think so I diversify this blog a little ;-).

For readers of the blog, I think it's obvious over the years that I'm very much a mainstream pop guy so I've been streaming the new Ed Sheeran = (DR5, 2021) for some contemporary tunes this past week. Ed has gotten more sappy over the years after getting married and being a dad - pretty normal. Catchy tunes nonetheless.

Also, '70s pop/disco is back, and so are Agnetha, Björn, Benny, and Anni-Frid - aka ABBA. Voyage (DR8, 2021) was released this week and I find it alright sonically, layered with a heavy dose of nostalgia. The return theme "I Still Have Faith In You" is a bit overdone. I think "Just A Notion" is catchy. I will be curious to see how the album does in sales and also how that virtual tour goes, where the performance appears to use motion-captured, computer-generated, un-aged avatars.

Have a great week ahead guys and gals. Remembrance Day for Commonwealth countries next week.

Hope you're enjoying the music...

Hi-

More audio placebo type effect: the "McGurk Effect" shows how totally expectations determine what we hear (look it up on YouTube).

Aside: I think I read at AS that Kal Rubinson is soon publishing a review of the Topping DAC for Stereophile....

Thanks Unknown,

Interesting to see how Stereophile presents the Topping. What will be most interesting would be what's implied or read between the lines. Also, how comparisons might be made (and which devices!).

Lots of good info in the latency arena. Not an area that I've put much thought into but good to hear about it.

I hadn't heard the term "Nocebo" before this article. Seems to fit a lot of scenarios in the subjective review world. I just received a Khadas Tone Board, version 1, that I picked up for $79 to replace a HiFiBerry DAC that's gone faulty in my headphone system. I put it in my main system and could be quite happy with it as my primary DAC. For me it feels a bit weird that such a low cost device is audibly just as good at DAC duties as the Chord and RME DACs that I also have.

Seems that it would be pretty easy to argue that a low cost device can't be as good as an expensive one. Absent expert knowledge and facts, humans tend to equate price with value. Come on, a $79 DAC as good as a >$1,200 DAC? Can't be; right? A subjective reviewer isn't relying on facts or expert knowledge at all. Once a device gets to an objectively transparent level then those subjective differentiators are just a matter of fashion without audible substance and really should be talked about as such.

Hi Doug,

Yeah for sure! There are many things in this world that come across as unexpected and challenge our biases/assumptions, but are true just the same...

For the longest time, everybody "knew" that gravity affected a lighter object less than a heavier object, thus different acceleration until Galileo proved acceleration was the same (barring drag/air resistance of course). Some people still apparently can't believe the Earth is spherical.

I think the "stupendous error" of audiophilia with pure subjective reviewers is to frame everything as "changing the sound" - regardless of the magnitude or limits of hearing. The idea that "everything" makes a difference in sound quality whether it's a 6' length of power cable (ignoring the miles of commercial wire that came before), or a 20mm fuse, typically ends up at the heart of audiophile arguments even when there is absolutely no evidence for such things.

And if everything makes a difference, then potentially a $500 fuse is better than a $1.00 Bussmann... Of course. ;-)

A great example of this nonsense was the many audiophile articles extolling the obvious differences between the Focal Clear Mg and Focal Clear Mg Pro headphones. Differences which conveniently went along the expected themes (the regular were more "musical" and "fun", the Pro more "analytical" and "clinical"). Eventually some enterprising person actually bothered to ask Focal what the difference was, and they answered: the color and the cables it was packaged with. Otherwise identical headphones. Apparently the snake oil industry is too up its own ass to even be bothered to take down a lot of these articles now that they look so foolish.

Wow Isaac,

Hilarious man. Sometimes I don't know whether to laugh or cry about such things. Typically, I'll LOL because there's enough tragedy in this world and we just have to approach human psychology with humor. (I try not to get into forum battles especially.)

As with basically all technology, I think once we've reached levels of quality encroaching on "good enough" for human needs or limits of perception, the subjective sentiment shifts toward imaginary biases. I think this is happening throughout consumer electronics. Smartphones are good enough so funky colors are key to improving perception and sales with each generation. Computers are fast enough so it's about the look/size/branding now...

Audio IMO was the harbinger to such things as companies market towards esthetics rather than technical abilities of many of our devices now. Headphones have been there already over the last decade with sales based on names like "Beats" not too long ago, rather than actual sound quality even.

And so it goes I guess. It's fun watching how things happen...

Remember the research from a couple of years ago that perceived loudness changed according to the color of the volume knob (red was highest) - when actually there was no change in volume.

That's pretty cool Unknown,

If you have a link to that research that would be great! I can imagine audiophile magazines not wanting to talk about research like this.

If I were an amp manufacturer, I would immediately apply the science and plan for the next generation of products to have the volume knob go up to "11" and colored Ferrari Red. Maybe make my "entry level" amp go to "10" and Ferrari Yellow knob.

https://mediatum.ub.tum.de/doc/1082448/file.pdf

Great stuff ;-)

Indeed March.

I find it even more comical when an example is the manufacturer themselves! Yeah, no bias there ;-).

Enjoyed reading your blog! I have learned the hard way that paraphernalia such as the AudioQuest JitterBug create more problems than they solve. That the iFi AC purifier sits there and consumes power (it runs warm) rather than performing "purification". That a sub-$100 product can make a bigger positive impact on a hi-fi system than products which cost 5x or 10x as much. That "value for money" software for others may not be VFM for me - search around and you find excellent, fully free software with comparable features. And then there are those that measure well but are frustrating usability-wise. All a result of going by numbers - not the measurements mind you, but the number of followers of influencers and the number of people who gave a 5 star rating for a product. :)

Yeah Unknown,

In general, I find that we have to be careful with what to believe on the internet these days. The signal-to-noise ratio is very poor at most review sites online, where there's no attempt or desire to run measurements to back up beliefs about "noise" or "jitter", in particular when we talk about these "purifier" devices. We can't trust "customer" reviews either because I bet many of the reviews on places like Amazon are also tainted with bots and stuff from the advertising department.

Indeed, we need to be mindful as you noted that products might even create more problems than they solve (or just waste money). The vast majority of the time, nobody bothers to check if there are issues...

I came across this old rant/plea from 2014 around audiophile fuses and reviewers:

https://www.audiocircle.com/index.php?topic=127160.0

Certainly the idea of having honest reviews and "reclaiming our hobby" is not new.

The great thing about the Internet is that we're provided with free speech. The bad thing is that we have uncurated free speech. Therefore, it is the duty of each of us to develop our critical thinking abilities.

IMO, do not look to the "pure subjectivist" audiophiles for assistance. I also believe that path of audiophilia is doomed to further marginalization and will be recognized as being, in general, prone to dishonesty: they speak about sound quality, but it's the other stuff like keeping the Industry happy, monetizing for $$$, and the number of subscribers they're gathering that is the true goal.