While this blog is primarily about audiophile stuff, over the years I have discussed modern audiophile systems, including thoughts on networks. Like it or not, computer audio/video is ubiquitous and has probably become the primary means of access for many of us these days (rather than physical media). Furthermore, a fast, responsive, reliable home network is almost essential as a foundational "utility" for entertainment and work. As discussed back in 2018, I've been running 10 gigabit/s home ethernet (standard copper RJ45 Cat-5e/6), with an update to the ASUS router and QNAP switch infrastructure in 2021.

In 2022, when it comes to very high-speed computer network cards, the

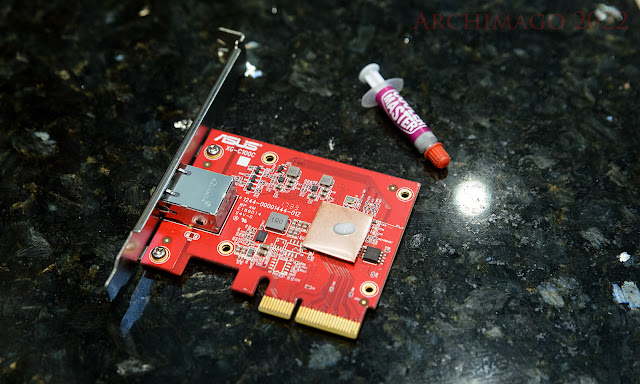

Aquantia/Marvell AQtion AQC107 chipset devices remain attractive due to reasonable price, PCIe 3.0 x4 compatibility, and ability to run at 2.5/5 gigabit intermediate speeds (also dropping down to 1Gbps and 100Mbps as needed of course). Over the years, I've seen comments online with frustrations around the popular cards like my

ASUS XG-C1001C shown above. The issues are typically described as episodic disconnects usually for about 5 seconds (discussed

here,

here) and then coming back online. As you can imagine, this will lead to a time-out of network transmissions.

Depending on the application, this might or might not lead to problems. For audio- and videophiles, it's a problem when we're streaming video to something like an Apple TV or playing music off Roon in real time. Other apps may be more tolerant and can just wait a little longer and retry.

Various users have attributed the trouble to all kinds of causes, from RF interference to power line disruptions to network cable issues. Since we are talking about very fast 10 gigabit/s transmissions, I suspect all of these could be to blame. While I've generally been fortunate, I have noticed these occasional disconnections showing up as warnings on my Windows Server 2019 computer a few times every 24 hours, seemingly at random.

Example of an AQC107 "network link lost" event ("Warning aqnic640") in the Windows Server 2019 event log. The warnings often come in clusters like this one, at random times (here around 6:45PM). Notice the previous disruption was four hours earlier at 2:43PM.

So for those who are experiencing this problem, here's what I did on both my Windows 11 Workstation and Windows Server 2019 machines, which run ASUS XG-C100C cards:

1. Update the NIC to the latest Marvell software: 1.8.0_3.1.121a firmware and 3.1.6 driver in Windows.

Go here and search under "Marvell Public Drivers", "Windows" 64-bit only, and the "AQC107" part number.

For Windows Server 2019, the latest 3.1.6 drivers installed but for some reason could not identify the card, so I went to Station-Drivers and got the slightly older 3.1.4 WHQL driver; its Windows 8.1 version works with the newer firmware under Server 2019.

2. Once you've done the above, go into Device Manager and change a few of the Advanced settings, including turning off power saving for the NIC, like so:

Note that this assumes you already know your network functions well at 10Gbps (i.e. you have no issues 99.99% of the time!) and all you want is extra stability. These settings force the NIC to connect only at 10Gbps, full duplex, which turns off auto-negotiation. Turn off the Energy-Efficient Ethernet feature, which is known to cause issues with some IP communications like Dante. Also, folks have noted that the "Recv Segment Coalescing" feature can lead to episodic disconnects (I turn off both the IPv4 and IPv6 options); on my system it neither improved transfer speed nor decreased CPU load anyway.

If your network cabling is older, or you know you will inevitably have issues at 10GbE, feel free to limit the link speed to 5 or 2.5GbE full duplex for stability.

Here's a rundown of some other settings in the "Advanced" tab:

Downshift retries: Disabled

Energy-Efficient Ethernet: Disabled

Flow Control: Disabled (usually unneeded overhead, and controversial benefit)

Interrupt Moderation: Enabled

Interrupt Moderation Rate: Adaptive

IPv4 Checksum Offload: Rx & Tx Enabled

Jumbo Packet: Disabled (make sure all devices are compatible before enabling!)

Large Send Offload (all IPv4 and IPv6 options): Enabled

Receive Buffers: 2000 (max 4096 in multiples of 8)

Maximum number of RSS Queues: 8 (correlates with the number of CPU cores)

Receive Side Scaling: Enabled

Recv Segment Coalescing (IPv4 and IPv6): Disabled

TCP/UDP Checksum Offload (IPv4 and IPv6): Rx & Tx Enabled

Transmit Buffers: 4000 (max 8184 in multiples of 8)

Log Link State Event: Disabled
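If you manage more than one machine, the same Advanced-tab values can in principle be applied from PowerShell with Windows' Set-NetAdapterAdvancedProperty cmdlet instead of clicking through Device Manager. The Python sketch below only prints those commands for review. The adapter name "Ethernet 2" is a hypothetical placeholder, and the display names and values are assumed to match the strings the Marvell driver shows; confirm them first with Get-NetAdapterAdvancedProperty.

```python
"""Print PowerShell commands that apply some of the Advanced-tab settings.

Assumptions: ADAPTER is a hypothetical name, and the DisplayName/DisplayValue
strings are assumed to match what the Marvell driver exposes. Verify with
Get-NetAdapterAdvancedProperty before running anything.
"""

ADAPTER = "Ethernet 2"  # hypothetical adapter name; substitute your own

# A subset of the settings listed above (display name -> display value).
SETTINGS = {
    "Downshift retries": "Disabled",
    "Energy-Efficient Ethernet": "Disabled",
    "Flow Control": "Disabled",
    "Interrupt Moderation": "Enabled",
    "Interrupt Moderation Rate": "Adaptive",
    "Jumbo Packet": "Disabled",
    "Receive Buffers": "2000",
    "Transmit Buffers": "4000",
    "Log Link State Event": "Disabled",
}


def ps_quote(text: str) -> str:
    """Wrap text in PowerShell single quotes, doubling embedded single quotes."""
    return "'" + text.replace("'", "''") + "'"


def build_commands(adapter: str, settings: dict) -> list:
    """Return one Set-NetAdapterAdvancedProperty command per setting."""
    return [
        f"Set-NetAdapterAdvancedProperty -Name {ps_quote(adapter)} "
        f"-DisplayName {ps_quote(name)} -DisplayValue {ps_quote(value)}"
        for name, value in settings.items()
    ]


if __name__ == "__main__":
    for cmd in build_commands(ADAPTER, SETTINGS):
        print(cmd)
```

Single-quoted PowerShell strings are used so that characters like "&" in values such as "Rx & Tx Enabled" are passed literally. Expect the adapter to possibly drop its link briefly as each property is applied, so run the printed lines from an elevated prompt at a quiet moment.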

[Regarding the buffer sizes and Jumbo Packets - see Addenda below if you're using Roon!]

Obviously some settings, like the receive/transmit buffers, can be optimized for your situation; I've seen the best speed on my machines using the middle buffer sizes rather than maxing them out. "Interrupt Moderation" reduces the interrupt request frequency, especially with high-speed networking, improving CPU efficiency. Feel free to set the moderation rate to "Low", or even disable moderation, if you need lower network latency (doing this along with lowering buffer sizes might be useful for gaming; it will not affect audio streaming quality).
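To put the buffer and interrupt numbers in perspective, here is a back-of-envelope Python sketch. It assumes (this is not from Marvell's documentation) that each receive descriptor holds one full-size frame and that the link runs at line rate; the per-frame wire overhead is the standard Ethernet header, FCS, preamble, and inter-frame gap.

```python
"""Back-of-envelope numbers for NIC receive-ring sizing at 10GbE.

Assumptions (not from Marvell documentation): one descriptor holds one
full-size frame and traffic arrives at line rate. Per-frame wire overhead:
14 B Ethernet header + 4 B FCS + 8 B preamble/SFD + 12 B inter-frame gap.
"""

LINK_BPS = 10e9                         # 10GbE line rate, bits per second
WIRE_OVERHEAD_BYTES = 14 + 4 + 8 + 12   # bytes on the wire beyond the MTU


def frames_per_second(mtu: int, link_bps: float = LINK_BPS) -> float:
    """Maximum number of full-size frames per second at line rate."""
    return link_bps / ((mtu + WIRE_OVERHEAD_BYTES) * 8)


def ring_fill_ms(descriptors: int, mtu: int, link_bps: float = LINK_BPS) -> float:
    """Time in ms for line-rate traffic to fill an empty ring of descriptors
    if the CPU stops servicing the NIC."""
    return descriptors / frames_per_second(mtu, link_bps) * 1000


if __name__ == "__main__":
    print(f"{frames_per_second(1500):,.0f} frames/s at 1500-byte MTU")
    for descriptors in (2000, 4096):
        print(f"{descriptors} receive buffers absorb "
              f"{ring_fill_ms(descriptors, 1500):.2f} ms of line-rate traffic")
```

Under these assumptions a 10GbE link can deliver roughly 813,000 full-size frames per second, and 2000 receive buffers cover about 2.5 ms of traffic (about 5 ms at the 4096 maximum). With one interrupt per frame the CPU could face hundreds of thousands of interrupts per second, which is why batching them with interrupt moderation saves CPU at the cost of a little latency.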

If you have a managed switch, you can also lock the port at the same speed (i.e. 10Gbps). This is what I've done with my QNAP QSW-M2108-2C (port 10 is my Windows Server, port 9 connects to the upstairs Workstation):

Lock switch speed to 10GbE full duplex.

3. Now run some torture tests to see if stability is maintained (see below for more).

4. Still running into issues? Consider if the NIC could be overheating.

I noticed one of my two ASUS cards seemed to disconnect much more often than the other; the firmware, driver, and settings updates above did not stop the problem. If you still have issues, especially disconnects during heavy data transfers on warm days, heat may be the cause. The AQC107 chip is small, yet these passively cooled cards have relatively large heatsinks, and they warm up quite a bit in use.

So I took the problematic card out for an examination. I noticed that the thermal interface doesn't seem all that robust with the ASUS XG-C100C. We can certainly improve it by getting our hands a little dirty. Let's take the heatsink off (easily done, just remove the 4 small screws) for a look:

Notice that all we have here is a soft, thick thermal pad over the chip surface (1.2cm x 1.4cm). Remember a major lesson from Computer Repair 101 - "Only use a thin layer of thermal compound!" Let's scrape that stuff off and use some alcohol for a clean look at the chip:

Aquantia/Marvell AQC107 shiny and clean...

Notice the space where the thick thermal pad was. I really wonder how well heat was being transferred across the material, and whether its conductivity may have deteriorated over time. Well, that obviously won't do! Let's get some physical metal contact for decent conductivity.

Here's a side view with a proper heat-transfer interface.

Copper plate used as a spacer to improve heat conduction/spread. Make sure the electrically conductive copper sheet isn't touching other components.

Now that's more like it! Good physical contact; just make sure not to overtighten the four small screws holding the heatsink in place. It has been said that the AQC107 uses 3W running at 5Gbps, so it's likely pushing something like 6W at 10Gbps. Even with the improved heat conduction, because this is a passively cooled card, make sure your computer has reasonably good airflow inside (maybe install the card close to a fan, or even strap on a 40mm fan).
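Why does swapping a thick pad for a copper spacer matter? A one-dimensional conduction estimate, R = t / (k·A), gives a feel for it. Only the 1.2cm x 1.4cm chip footprint and the ~6W figure come from the text; the pad thickness, shim thickness, and conductivities below are hypothetical "typical datasheet" values, and spreading and heatsink-to-air resistance are ignored.

```python
"""1-D conduction estimate: thick thermal pad vs. copper shim with thin paste.

All material values are hypothetical illustrations, not measurements of the
ASUS card. Spreading resistance and heatsink-to-air resistance are ignored.
"""

CHIP_AREA_M2 = 0.012 * 0.014   # 1.2 cm x 1.4 cm chip surface (from the text)
POWER_W = 6.0                  # rough estimate at 10Gbps (from the text)


def layer_resistance(thickness_m: float, k_w_per_mk: float,
                     area_m2: float = CHIP_AREA_M2) -> float:
    """Conductive thermal resistance R = t / (k * A), in kelvin per watt."""
    return thickness_m / (k_w_per_mk * area_m2)


def temperature_rise(layers, power_w: float = POWER_W) -> float:
    """Temperature drop across layers in series: dT = P * sum(R)."""
    return power_w * sum(layer_resistance(t, k) for t, k in layers)


# Hypothetical stacks as (thickness in metres, conductivity in W/m.K).
PAD_STACK = [(1.0e-3, 3.0)]                 # 1 mm soft thermal pad
COPPER_STACK = [(0.05e-3, 4.0),             # thin paste layer
                (0.5e-3, 400.0),            # 0.5 mm copper shim
                (0.05e-3, 4.0)]             # thin paste layer

if __name__ == "__main__":
    print(f"pad:    {temperature_rise(PAD_STACK):.1f} K across the interface")
    print(f"copper: {temperature_rise(COPPER_STACK):.1f} K across the interface")
```

With these assumed values the pad alone accounts for roughly 12 K between chip and heatsink, while the copper-plus-paste stack is under 1 K. The real numbers depend on the actual materials, but the ratio shows why a thick, soft pad is a weak link.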

To test, we can copy data across the 10GbE network and should see stable, high-speed transfers (477MB/s here, limited by SSD speed, over SMB file sharing):

Real-life 50GB directory copy across 10GbE.
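A quick unit conversion shows why that copy is described as SSD-limited rather than network-limited. This sketch assumes decimal units (1 MB = 10^6 bytes) and a constant copy rate; if the dialog actually reports binary MiB, the result is about 5% higher.

```python
"""Convert the observed file-copy rate into 10GbE link utilisation.

Assumes decimal units (1 MB = 10**6 bytes) and a constant copy rate.
"""

COPY_RATE_MB_S = 477   # observed copy speed
COPY_SIZE_GB = 50      # size of the directory copied
LINK_GBPS = 10.0


def mb_per_s_to_gbps(mb_per_s: float) -> float:
    """Megabytes per second (10**6 bytes) to gigabits per second (10**9 bits)."""
    return mb_per_s * 8 / 1000


if __name__ == "__main__":
    gbps = mb_per_s_to_gbps(COPY_RATE_MB_S)
    print(f"{gbps:.2f} Gbps, {gbps / LINK_GBPS:.0%} of the 10GbE link")
    print(f"~{COPY_SIZE_GB * 1000 / COPY_RATE_MB_S:.0f} s for {COPY_SIZE_GB} GB")
```

About 3.8Gbps, well under 40% of the link, so the storage is the bottleneck here.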

Or use iPerf 3 and have a look at the maximum transfer speed from the Windows Server 2019 machine to my Windows 11 Workstation, across the QNAP and Netgear switches, over 7200 seconds (2 hours):

Performance test across the network with the managed QNAP QSW-M2108-2C and unmanaged Netgear GS110MX switches in the path. Windows 11 Workstation: iperf3.exe -s -i 60. Windows Server 2019: iperf3.exe -c 192.168.1.10 -t 7200 -P4 -i 60 -l 128k. (192.168.1.10 is the static address I keep my Workstation computer at.) See the Addendum for an update using a newer iPerf3 build with a single stream, without the "-P4" option.

Excellent: almost 9.5Gbps average sustained speed with less than 10% CPU (Intel i7-7700K) utilization, running the network at full throttle for 2 hours while streaming music over Roon, with a web server running in the background and some blog editing going on. Since I don't foresee ever running the network at maximum speed for 2 hours straight, this seems a reasonable "torture test".

As you can see from the last few minutes of iPerf data, the transfer rate stayed consistently above 9.4Gbps. Assuming you're not doing a lot of background data transfer, if you see the interval averages bouncing around, especially dipping below 9Gbps, this could be a sign of overheating or poor cable quality.
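For a 2-hour run it's easier to let a script spot the dips. This is a minimal sketch assuming the test was run with iperf3's JSON output option (-J), e.g. `iperf3.exe -c 192.168.1.10 -t 7200 -i 60 -J > run.json`, and that the report has iperf3's usual layout: an "intervals" list whose entries contain a "sum" object with "start", "end", and "bits_per_second". The 9Gbps threshold is the rule of thumb from the paragraph above.

```python
"""Flag iPerf3 intervals whose throughput dips below a threshold.

Assumes a report produced with iperf3 -J whose "intervals" entries each
contain a "sum" object with "start", "end" and "bits_per_second".
"""

import json
import sys

THRESHOLD_BPS = 9e9   # rule-of-thumb floor for a healthy 10GbE link


def slow_intervals(report: dict, threshold_bps: float = THRESHOLD_BPS) -> list:
    """Return (start_s, end_s, gbps) for every interval below the threshold."""
    slow = []
    for interval in report.get("intervals", []):
        summary = interval["sum"]
        bps = summary["bits_per_second"]
        if bps < threshold_bps:
            slow.append((summary["start"], summary["end"], bps / 1e9))
    return slow


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        report = json.load(fh)
    for start, end, gbps in slow_intervals(report):
        print(f"{start:7.0f}-{end:7.0f} s: {gbps:.2f} Gbps")
```

Usage: `python slow_intervals.py run.json` (the script name is just an example). No output means every interval stayed above the threshold.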

Also, while running the iPerf test, I can look at my QNAP switch port stats to see if it detects errors:

Errors do show up over time; I cleared the counters before running the iPerf test.

Nice. At least at the level of this switch (again, 10GbE port 9 heads upstairs to my Workstation, and port 10 connects directly to the Server), after more than 7.25 trillion bytes transmitted between the two computers, zero CRC and FCS (Frame Check Sequence) errors were detected. And this is at almost 10 times the speed of the typical 1 gigabit ethernet found in most homes these days.
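What does "zero errors" over that much data actually tell us? A standard way to bound an error rate when no failures are observed is the zero-failure confidence bound, -ln(1 - C)/n (about 3/n at 95%, the "rule of three"). The sketch below treats every transferred bit as an independent trial, which is a simplifying assumption since real link errors can be bursty and the switch counts errored frames rather than bits. The 10^-12 figure is the IEEE 802.3 bit error ratio target for 10GBASE-T.

```python
"""Upper bound on bit error rate after observing zero CRC/FCS errors.

Assumes independent bit errors, a simplification for real links.
"""

import math

BYTES_TRANSFERRED = 7.25e12
TARGET_BER_10GBASE_T = 1e-12   # IEEE 802.3 target bit error ratio


def ber_upper_bound(bits: float, confidence: float = 0.95) -> float:
    """Upper confidence bound on BER when zero errors were observed."""
    return -math.log(1 - confidence) / bits


if __name__ == "__main__":
    bound = ber_upper_bound(BYTES_TRANSFERRED * 8)
    print(f"BER < {bound:.1e} at 95% confidence "
          f"(spec target {TARGET_BER_10GBASE_T:.0e})")
```

Roughly 5e-14, comfortably better than the specification target, under the stated assumptions.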

Hopefully this combination of firmware/driver update, hardware hack, and setting changes helps if you're also having issues. The Aquantia AQC107 cards are inexpensive 10GbE NICs, so while I don't expect them to be as stable as enterprise solutions, I think they can work reliably in the home environment. At least with the ASUS XG-C100C, the marginal thermal/heatsink interface can be improved.

Addendum:

Recently, I needed one more 10GbE card and got the TP-Link TX401 (~US$100), also based on the AQC107 chip. Note the slightly longer heatsink compared to the ASUS:

Cards are about the same height, with the ASUS a little wider; the TP-Link heatsink is longer and probably radiates heat better with its fins.

Nice that TP-Link has included the half-height bracket and a good 1.5m length of Cat-6A STP ethernet cable.

I updated the firmware and drivers to the Marvell OEM versions as above. Speed-wise it measured the same as the ASUS, and out of the box it seemed quite stable already, running at 10GbE for a few days now. The TP-Link NIC is named generically in Device Manager as "Marvell AQtion 10Gbit Network Adapter", and I see it has an extra Aquantia diagnostics panel which I had not seen before with the ASUS card:

Hmmm. Curious if anyone has seen this diagnostics panel with the ASUS AQC107 card or if you know how to activate this.

--------------------

"DALL-E, show me a photo of an audiophile ethernet switch with a glass of snake oil sitting to the right side, on a table with a blue background."

Pretty cool that the algorithm drew me an ethernet switch with an LED indicator (sound setting "C"?) and even a VU meter to indicate the peak awesomeness of the audiophile data passing thru. Maybe this switch can be used to "authenticate" the sound. ;-)

A final note to audiophiles.

Yes, networks can be complex systems, especially when we mix and match various brands and multiple devices. Like it or not, modern technologically-based hobbies demand that we understand a thing or two about how to set things up properly and fix problems when they arise. Make sure to understand the technology well enough that you don't get caught up in fear, uncertainty, and doubt (FUD): unfortunate factors that, IMO, drive some of the beliefs and desires of consumers in this hobby, encouraged by unscrupulous companies. (Unfortunately, this potential for certain consumers to become technologically embarrassed and thus give in to FUD is on display in many audiophile reviews in magazines and online.)

It's not difficult to get networks functioning; products come with manuals to follow, and these days there are a number of forums where one can get good technical help! However, the audio industry continues to suggest that thousands of dollars spent on ridiculous ethernet cables could make a difference, and some still tout the inexplicable benefits of basically marked-up ethernet switches from small companies like these (of course, I would still much prefer the NetGear Nighthawk S8000 if one can find one :-). And some still claim that otherwise bit-perfect, packet-based asynchronous ethernet data transmission can result in different sound qualities (yet they can never provide evidence for such beliefs).

It doesn't take much of a search to find examples such as this shilling of the Synergistic Ethernet Switch UEF (US$2300, only 5 ports) as improving the sound with "proper harmonic structure and tone". Obviously, this kind of review makes no sense (here's an even worse review of the Synergistic stuff). These people don't even offer a reasonable putative mechanism for such a sonic change. Looking at these reviews and the Synergistic website, I can't even figure out something as basic as what speed the ethernet switch operates at! Can it even handle 1 gigabit speed?

Speaking of speed, it's amazing that many of these companies are only offering 100Mbps devices like the JCAT M12 Switch Magic for a mere €2550. It looks to me like they're just milking higher margins on old technology while hyping the novelty of atypical M12 connectors ("used in railway in Japan", and in factory automation; so what?)!

Not surprisingly, the moment anything is described as "audiophile", prices rise by at least 100%. Stuff like the JCAT Net Card XE (€800) is based on the Intel i350 gigabit chip released in 2014, with unsubstantiated benefits from linear power upgrades and OCXO clocking (BTW, you can get a dual-port i350 NIC for about US$100). Or, for the even more esoteric computer audiophile, how about the M.2-interfaced SOtM sNI-1G, which is a relative steal at US$350 but rises to over US$1,000 with the "sCLK-EX" clock upgrade?

Regarding that clock upgrade for a grand: seriously folks, what are we doing this for with an ethernet card, where the signal is often sent tens (even hundreds) of feet from the server computer to your streamer, where the data will be reassembled, processed, and then more than likely fed asynchronously to a DAC that has its own clock!? Even if the ethernet card or downstream switch has "femtosecond" levels of accuracy internally, this would have no meaningful effect on the DAC at the end, unless you're saying that the clocking issue is so severe that it causes data errors!
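A toy model makes the point concrete. This is a simplified illustration under hypothetical assumptions, not any product's actual design: audio arrives in 1024-sample packets whose arrival times are jittered by up to ±1 ms (about a billion times worse than "femtosecond" clocking), and the DAC starts after a 50 ms pre-buffer and then reads samples on its own clock. Arrival jitter can only matter if a packet shows up after the DAC needs it, and that produces a dropout, not a subtle change in sound.

```python
"""Toy model of buffered, asynchronous playout at a streamer/DAC.

Hypothetical assumptions: 1024-sample packets, uniform arrival jitter,
a fixed pre-buffer, and a DAC that plays on its own clock. The DAC clock's
own jitter and sender/DAC clock drift are not modeled.
"""

import random

FS = 44_100      # DAC sample rate (Hz)
PACKET = 1024    # samples per network packet (hypothetical)


def simulate(n_packets: int, jitter_s: float, prebuffer_s: float = 0.050,
             seed: int = 0):
    """Return (playout_times, underruns) for one run of the toy model."""
    rng = random.Random(seed)
    period = PACKET / FS                  # nominal time per packet
    playout, underruns = [], 0
    for i in range(n_packets):
        # Network arrival: nominal time plus random jitter (never before t=0).
        arrived = max(0.0, i * period + rng.uniform(-jitter_s, jitter_s))
        # The DAC's own clock decides when packet i must start playing.
        due = prebuffer_s + i * period
        if arrived > due:
            underruns += 1                # a dropout, not a tonal change
        playout.append(due)
    return playout, underruns


if __name__ == "__main__":
    clean, _ = simulate(2000, 0.0)
    jittery, late = simulate(2000, 0.001)
    print("identical DAC timing:", clean == jittery, "| underruns:", late)
```

The DAC's output schedule is identical with or without network jitter; only a pre-buffer smaller than the jitter produces underruns.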

Furthermore, what kind of noise are they worried would come out of the NIC and into the ethernet cable to affect the audio streamer, and even the DAC (let's not even worry about the switch(es) that could be along the path)!? Please, let's have a look at the evidence. Are we sure those who hype this stuff honestly believe what's being sold? Could they be delusional? Or could some of them simply be dishonest scammers, consciously shilling for the companies? (Gasp 😱!)

It would be nice to see some "truth in advertising" regulation governing companies like these in the audiophile cottage industry. But even if there were regulations, enforcement would be doubtful. The use of purely subjective opinions as "evidence" in audiophile magazines and online reviews has totally damaged the credibility of so much of the audiophile literature. Given the minefield of questionable hype and more-than-likely false claims, one really needs to tread carefully to determine what is valuable and what must be ignored. Good luck, audiophiles.

Speaking of "evidence", I see some companies like the Silent Angel folks have now included measurements such as eye diagrams in their product descriptions, like this for the Bonn N8 switch (8-port, 1GbE, asking price US$550). Does anyone out there believe that the 369ps vs. 705ps jitter difference at the level of the ethernet switch has an effect on the sound quality coming from the downstream DAC?! Do not mistake these numbers for DAC jitter values; they are not the same thing, and there is no correlation. Even that "power source signal" comparison, presumably of the 5V/2A Forester F1 (US$600) power supply vs. maybe a generic switching wall-wart, doesn't imply that the ethernet signal would be superior in any way with the expensive PS. While I appreciate that these Silent Angel boxes might be nicer looking, more reliable, or better able to handle suboptimal network conditions compared to a much less expensive device, there's nothing here to suggest they "sound better" than any other bit-perfect ethernet switch. No, it's not true that "everything matters" when it comes to sound quality, and likewise, not all measurable differences matter. The goal is to gain enough understanding, relevant experience, and wisdom to appraise the claims made, so as to cut through rampant frivolous consumerism and pseudointellect in the audiophile hobby space.
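For scale, it helps to compare the quoted jitter with the length of one line symbol. This sketch assumes the eye diagrams are of a 1000BASE-T port, which signals at 125 Mbaud on each pair (8 ns per symbol). The 369ps and 705ps values are the ones quoted above. The receiver only has to decide each symbol correctly; after that, the bits are just data.

```python
"""Express quoted switch jitter as a fraction of the 1000BASE-T symbol period.

Assumes the eye diagrams are of a 1000BASE-T port (125 Mbaud per pair).
"""

SYMBOL_RATE_BAUD = 125e6
UNIT_INTERVAL_PS = 1e12 / SYMBOL_RATE_BAUD   # 8000 ps per symbol


def fraction_of_ui(jitter_ps: float) -> float:
    """Jitter as a fraction of one symbol period (unit interval)."""
    return jitter_ps / UNIT_INTERVAL_PS


if __name__ == "__main__":
    for jitter_ps in (369.0, 705.0):
        print(f"{jitter_ps:.0f} ps: {fraction_of_ui(jitter_ps):.1%} of an 8 ns symbol")
```

Both figures are small fractions of the symbol period (about 4.6% and 8.8%), so either eye is wide open for error-free symbol decisions, and the bits handed on are the same.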

[Don't forget that these days, high quality audio streaming through Logitech Media Server, Roon/RAAT, and even UPnP/DLNA uses TCP's error-checked data transfers (with retransmission), which further ensure bit-perfection.]
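As an illustration of the error checking involved, here is the 16-bit Internet checksum from RFC 1071, which TCP uses. This is a minimal sketch: real TCP computes it over a pseudo-header, the TCP header, and the payload, whereas here it is applied to raw bytes only. A segment that fails the check is discarded and, in practice, retransmitted, so corrupted audio data does not quietly reach the player. Ethernet's CRC-32 FCS is a stronger per-frame check applied before this.

```python
"""The 16-bit Internet checksum (RFC 1071), applied to raw bytes.

Sketch only: real TCP also covers a pseudo-header and the TCP header.
"""


def internet_checksum(data: bytes) -> int:
    """One's-complement of the one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"                           # pad odd length with zero
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]     # big-endian 16-bit words
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # end-around carry
    return ~total & 0xFFFF


if __name__ == "__main__":
    segment = bytes([0x00, 0x01, 0xF2, 0x03, 0xF4, 0xF5, 0xF6, 0xF7])
    good = internet_checksum(segment)
    corrupted = bytearray(segment)
    corrupted[3] ^= 0x10                          # flip one bit "in transit"
    print(hex(good), internet_checksum(bytes(corrupted)) != good)
```

The sample bytes are the worked example from RFC 1071. Flipping a single bit changes the checksum, so the receiver can detect the damage.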

Hope you're enjoying the music, dear audiophiles, as we enter October...

Addendum:

Thanks to Etienne in the comments for the link to the new bug-fixed iPerf builds. Here's the latest 64-bit version 3.12 with a single stream, "iperf3.exe -c 192.168.1.10 -t 7200 -i 60 -l 128k", on my system:

Receive buffers = 2000, Transmit buffers = 4000, as per the settings above.

Another addendum:

I turned on jumbo frames (the 9014-byte setting in the NIC) on the Workstation and Server; the switches support the larger MTU. This reduces packet overhead, improving overall transmission speed and reducing CPU cycles as well:

Receive buffers = 2000, Transmit buffers = 4000.

Not bad: about a 0.4Gbps increase over the standard 1500-byte payload with large transfers.
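The size of that jump is about what header overhead predicts. This sketch computes theoretical TCP goodput on 10GbE, assuming IPv4 and TCP headers with no options (20 + 20 bytes), full-size frames, and the standard Ethernet per-frame wire overhead. It also assumes the NIC's "9014 bytes" setting corresponds to a 9000-byte MTU plus the 14-byte Ethernet header.

```python
"""Theoretical TCP goodput on 10GbE: standard vs. jumbo frames.

Assumptions: IPv4 + TCP headers without options, full-size frames only,
and a 9000-byte MTU for the NIC's "9014 bytes" jumbo setting.
"""

LINK_BPS = 10e9
ETH_WIRE_OVERHEAD = 14 + 4 + 8 + 12   # header, FCS, preamble/SFD, inter-frame gap
IP_TCP_HEADERS = 20 + 20              # IPv4 + TCP, no options


def tcp_goodput_bps(mtu: int, link_bps: float = LINK_BPS) -> float:
    """Payload bits per second when every frame is full-size."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return link_bps * payload / on_wire


if __name__ == "__main__":
    std, jumbo = tcp_goodput_bps(1500), tcp_goodput_bps(9000)
    print(f"1500 MTU: {std / 1e9:.2f} Gbps, 9000 MTU: {jumbo / 1e9:.2f} Gbps, "
          f"gain {(jumbo - std) / 1e9:.2f} Gbps")
```

The ceilings come out at about 9.49Gbps and 9.91Gbps, a gain of about 0.42Gbps. That is consistent with the ~9.5Gbps single-stream result and the ~0.4Gbps improvement measured above. The lower per-packet CPU cost is a separate benefit.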


I would recommend against using the iperf3 Windows binary from iperf3.fr as it has a known bug where it uses overly small TCP window sizes, which can result in abnormally low speeds being reported, especially for links with a high bandwidth-delay product such as 10 GbE. See https://github.com/esnet/iperf/issues/960. It's likely the reason why you felt the need to specify -P4: in a properly functioning network stack you should be able to achieve full bandwidth with a single stream. Your stack is probably fine; it's iperf3 that's the problem.

You should use a known good Windows build of iperf3 instead. One good source is https://files.budman.pw/. I expect that with the correct build you will achieve 10 Gbit/s on a single stream (i.e. without -P4).

The administrator of iperf3.fr was informed more than 2 years ago that he's hosting broken binaries, see https://lafibre.info/iperf/lauteur-diperf3-a-propos-diperf-fr/ (in French), but never did anything about it. You're just the latest in a long line of victims, sadly.
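Why a too-small TCP window caps a single stream: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput ≤ window / RTT. To fill the link, the window must be at least the bandwidth-delay product (BDP). In this sketch, the 0.5 ms round-trip time and 64 KiB window are hypothetical example values, not measurements of the broken build or of this network.

```python
"""Bandwidth-delay product and the window-limited throughput ceiling.

The RTT and window size used below are hypothetical example values.
"""

LINK_BPS = 10e9
RTT_S = 0.0005   # hypothetical LAN round-trip time


def bdp_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the link full."""
    return link_bps * rtt_s / 8


def max_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on one TCP stream's throughput for a given window."""
    return window_bytes * 8 / rtt_s


if __name__ == "__main__":
    print(f"BDP: {bdp_bytes(LINK_BPS, RTT_S) / 1e3:.0f} kB needed in flight")
    capped = max_throughput_bps(64 * 1024, RTT_S)
    print(f"64 KiB window: at most {capped / 1e9:.2f} Gbps per stream")
```

Under these assumptions, one stream would top out near 1Gbps. Parallel streams such as -P4 each get their own window, which helps explain why multiple streams were needed with a faulty build.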

Nice Etienne!

Very good. I'll update the link to the one you provided and indeed using just a single stream, I'm getting ~9.5Gbps...

Thanks.

Hi Archimago! It's been a long time since I've seen PCI-based network cards; these days they are either built into the motherboard or provided as a USB-to-Ethernet dongle. However, for achieving high speeds this likely makes sense, until you buy a next-generation motherboard with 10Gb Ethernet built in.

Regarding audio transfer via networks, I think there might be confusion stemming from the fact that there are 2 major ways of sending it: non-realtime and realtime. The non-realtime way is indeed similar to copying files: the sender sends as much audio data as it can, the receiver receives it, verifies checksums, asks for retransmission if needed, and finally buffers the verified data and plays it through its DAC. This is how you are listening to albums from streaming services, and it indeed works great regardless of where the server is located and what the quality of the network is in between (if it's notoriously bad, the receiver will just not be able to buffer enough data to play on time, and anyone will hear that as dropouts; it will not result in "loss of details").

In a realtime transfer, the sender actually sends the stream at the pace corresponding to the sampling rate of the audio (there might be some buffering, but it's usually minimal). It can put a checksum on its data; however, the receiver will not be able to ask for a retransmission, as that would take extra time, so only error correction is possible. Another problem is matching effective sampling rates, because the sender and the DAC of the receiver can be unsynchronized. In protocols like AirPlay, the receiver usually must resample the audio to match the sampling rate of its DAC, because the DAC is not "locked on" to the sender. This problem of free-running clocks is solved in "pro" audio networks: AVB, Dante, Ravenna, etc. In these networks you select the master clock, and then all other devices lock onto it using synchronization packets. This usually means that the network has to be under control, and AVB, for example, requires dedicated switches; you definitely can't use the Internet :)

In short, problems of jitter still apply to realtime transfer, and modern equipment and protocols make sure that their effects are unnoticeable (I'm not sure about AirPlay though). Myself, I tried a simple test: sending a Dirac pulse train, with a pulse every 1 second, over a digital connection (it doesn't matter whether it's a classic AES, SPDIF, or a modern audio network standard), capturing it on the receiver, and checking whether the pulses are still aligned as expected. There may be surprises! Note that if resampling is involved, Dirac pulses will turn into sincs, and the received stream should be oversampled to see their "true peaks."
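The pulse-train check described in this comment can be sketched in a few lines. This is a minimal stdlib-only illustration that assumes the capture is already a list of float samples with one pulse per `period` samples, each placed well inside its window (for example, mid-period). Each pulse is located by its largest absolute sample and refined with parabolic interpolation, so a resampled, sinc-shaped pulse still gets a sub-sample peak estimate. Any spacing that differs from `period` points to a timing problem in the chain.

```python
"""Check the spacing of a captured Dirac pulse train.

Assumes one pulse per `period` samples, each well inside its window.
"""


def pulse_train(period: int, count: int, offset: int = 0) -> list:
    """Ideal test signal: a unit impulse every `period` samples."""
    signal = [0.0] * (period * count)
    for n in range(count):
        signal[offset + n * period] = 1.0
    return signal


def peak_positions(signal: list, period: int) -> list:
    """Sub-sample peak position of the pulse in each window of `period`."""
    peaks = []
    for start in range(0, len(signal) - period + 1, period):
        window = signal[start:start + period]
        i = start + max(range(period), key=lambda j: abs(window[j]))
        delta = 0.0
        if 0 < i < len(signal) - 1:
            a, b, c = abs(signal[i - 1]), abs(signal[i]), abs(signal[i + 1])
            denom = a - 2 * b + c
            if denom != 0:
                delta = 0.5 * (a - c) / denom   # parabolic vertex offset
        peaks.append(i + delta)
    return peaks


def spacings(peaks: list) -> list:
    """Distances between consecutive peaks, in samples."""
    return [b - a for a, b in zip(peaks, peaks[1:])]


if __name__ == "__main__":
    sig = pulse_train(44_100, 5, offset=22_050)   # one pulse per second at 44.1 kHz
    print(spacings(peak_positions(sig, 44_100)))
```

With a real capture, you would pass in the recorded samples (for example, read from a WAV file) and set `period` to the sample rate for one pulse per second.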

Thanks for the note Mikhail,

Yeah, depending on the streaming protocol, we can certainly see variation in how "accurate" the data transfers are, in both the temporal and error dimensions. This is why I specifically mentioned Roon, LMS, and DLNA as examples of systems using TCP in the transport layer, which detects errors (with packet retransmission), manages out-of-order packets, and implements flow control. Dropped data, out-of-order timing, and errors are more of an issue with UDP streams, which are more geared towards stuff like real-time gaming to reduce latency. Buffering at the streaming endpoint, followed by asynchronous USB transfer to a DAC, then provides extra levels of jitter reduction.

Streaming something like a Dirac pulse across ethernet should not result in any alignment issues with good "audiophile" software like Roon. Certain DACs mute if they see no data for a while, and I've had to be creative when measuring, building complex streams that incorporate these impulses; at least with LMS, I have not found any issues, and the asynchronous nature "fixes" jitter to the performance level of the DAC.

Yeah, if the devices implement resampling, for sure you'll see changes to the impulses... Other than streaming to my Zone 2 in the home (kitchen/living room) for convenience, I have never really tested AirPlay to any extent. I see AirPlay and Sonos streaming more as basic consumer "commodity" audio, so I would not consider them "audiophile" grade options ;-) [both I believe internally resample when needed - AirPlay 48kHz, Sonos 44.1kHz].

Thanks for the reply Archimago! Just some clarifications:

> UDP streams which are more geared towards stuff like real-time gaming to reduce latency

Also when there are multiple endpoints that need to play "in sync", like multi-room playback. I'm curious what does Roon do when it's asked to "group zones" (https://help.roonlabs.com/portal/en/kb/articles/faq-how-do-i-link-zones-so-they-play-the-same-thing-simultaneously#Open_The_Zone_Picker)

> if the devices implement resampling, for sure you'll see changes to the impulses

Change of the impulse itself is expected. However, change of the time distance between pulse peaks is less desired.

> AirPlay 48kHz

AirPlay uses 44.1 kHz, please see here https://en.wikipedia.org/wiki/AirPlay#Protocols. Makes sense since the majority of Apple Music content is in 44.1.

Thanks for the correction Mikhail,

For some reason I had 48kHz in mind with AirPlay.

At the level of the analogue output, I don't think you'll find any issues with time distance between pulses, give it a try and let me know what you find!

I believe Roon has been using TCP for audio data transfers for a while now, even though it keeps UDP open for device discovery. There was an announcement about this protocol change from Roon back in 2017 (version 1.3):

https://community.roonlabs.com/t/roon-1-3-build-234-is-live/26719

Not sure about multi-zone streaming; presumably they're linking each device with the Roon Core over TCP rather than using a multicast UDP system. I'll play with that at some point and see. I know there are limits as to which devices can be zoned with each other (e.g. only RAAT, only AirPlay; I can't seem to group a RAAT device with my Chromecast, for example).

Archimago! Question about music servers!

I've used a Raspberry Pi/Logitech server set up for my digital music for years. Was very happy until it became more and more glitchy, and none of my endless trouble-shooting could totally fix things. So in a Hail Mary move I grabbed a Bluesound NODE music server. I set it up tonight, sending the signal via optical to my Benchmark DAC2L (then setting the volume output on the Bluesound NODE to "fixed" and I use my preamp for volume).

It worked like a dream in terms of ease of set up. Though I will have to transfer my burned CD library to an MS-DOS (FAT) formatted drive to work with the Bluesound. So it was just listening to my favorite internet radio stations for now, accessing TuneIn Radio from the BluOS controller app.

The thing is, it sounded sort of weird, different than I'm used to. It seemed to lack the body/solidity/presence/punch I normally hear. Sounded a bit "wispy" and insubstantial. Very puzzling. To test AirPlay I dialed up those same stations on the TuneIn app on my iPhone, then sent that stream via AirPlay to the Bluesound.

Then, boom, it sounded different - it had that more familiar density/presence/punch/solidity. Baffling. I double-checked that I was listening to the same 320 kbps MP3 streams on both the Airplay and the BluOS app, and kept switching the stream between them, and the Airplay consistently sounded different in the way I just described.

Now I am always aware of the power of imagination (as we've discussed many times here) so I certainly haven't ruled that out. But boy-oh-boy this seems really distinct (subtle for sure, but distinct). Does this make any sense at all? Before I rule it pure imagination, are there any technical reasons why the sound could be different via AirPlay? Thanks.

Hi Vaal,

Interesting observation! Glad that the Bluesound NODE is working out; hopefully transferring the CD library over isn't too much hassle. Old FAT format? Hope you don't run into significant filename issues.

Assuming you're right that this is the exact same MP3 stream, there could be some variation due to:

1. Could MP3 decoding be done differently in TuneIn vs. BluOS? Obviously make sure there are no EQ or other settings you've adjusted in the apps.

2. Could AirPlay be changing the sound? For example, if the MP3 is encoded at 48kHz, is it possible that AirPlay is resampling it to 44.1kHz (as Mikhail noted above)?

Since MP3 is a lossy format and different decoders aren't guaranteed to produce bit-identical output, the bits sent to your Benchmark are likely somewhat different between the two.

Curious at the end of the day your final conclusion or if you find out what's happening!

Archimago, thanks for the suggestions. I'll take a look.

It's either my imagination or there is an audible difference. If there is an audible difference then something is technically changing and I'm presuming it's something buried in settings somewhere.

When I'm perceiving something that I understand to be technically dubious, I like to do a blind test. Perhaps I'll try one.

"Speaking of "evidence", I see some companies like the Silent Angel folks have now included measurements..."

Speaking of Silent Angel, one of their representatives was a member of a forum I'm a moderator of that's dedicated to music streaming technologies.

Anyway, he joined the forum and started promoting their products, and I politely asked him to provide us with a performance analysis. He went into this long monolog that was full of dazzling technical jargon, but that made absolutely no sense to anyone with actual electrical and/or computer engineering experience - that someone being a couple of other forum members and myself.

I showed him the door. I have a duty of care for our membership and zero tolerance for confidence artists, and he seemed to be an obvious one.

Thanks for the note Art,

You bring up an important point about the responsibilities of a forum moderator. Considering that so much of what we learn these days happens in forum discussions, it's important for moderators to adhere to an honest code of conduct and truthfulness.

Good on you for showing him the door. IMO companies/individuals like these are just fishing for the gullible audiophiles; that has become their sad way of life. I agree that it's important to give them an opportunity to prove their case. And if they can't, then it's protective to not have their ideas stain a healthy forum!

Thanks for this great information! Do you know if ASUS V1 and V2 cards share the same chipset? The official firmware update on the ASUS website is only for V1 cards, though.

I was having mine in a Windows 11 box drop off the network every time Steam tried to download. It wouldn't come back without a reboot; it seemed to crash the whole network stack, as even the on-board NIC would no longer work.

Updating the firmware so far seems to have fixed it, but I have no doubt it's running hot, as the same model card in my Linux box was clocking in at 83C when idle at a 10Gbit link. Forcing EEE on dropped that to 77C.

Clearly that crappy heatsink and pad aren't up to Aquantia's spec of being able to run passively with a 55C case temp, lol. ACPI says my motherboard temp is only 28C. Yet I've had no obvious performance issues on that box.